In my last post I described a city builder using GPT. I was planning on putting a pin in that work with the post, but the heart wants what the heart wants and I found myself returning to that work.

In this post I’ll talk about generating images from the source material, chatting with your characters, creating plots, some more about the authoring experience, and the move to a more declarative implementation.

To get an idea of how this works here’s a video:

As a demonstration of the tool I’ve created Veilvale, a fantastical city powered by esoteric magic and a populace where everyone wears a mask as their identity. It has over 280 characters, 70 buildings and landmarks, and 450 images. I specifically mention its size because while it wasn’t easy to build, it also wasn’t that hard and I reached a level of completion that exceeds any other worldbuilding project I’ve attempted.

Table of contents

- Generating images

- Chatting with your creations

- Plots

- The authoring process

- A declarative approach to building

- Conclusion

Generating images

I equivocated on image generation in the last post. But images are great! And at least at this moment Midjourney is making the best generative images. What Midjourney isn’t good at is automation. Then again, no unattended image generation process creates good results anyway.

To make it more feasible I created a bit of automation. The whole city took about 55 hours of Midjourney CPU time.

Contextualizing descriptions

I have GPT create prompts from character descriptions. The request looks like this:

[all the world context]

[character description]

Give a description of the person in the form of a picture. Do not assume any context. Focus on the visual details. Use 3-4 sentences.

I could just pass the original character description to Midjourney. Midjourney is also happy to hallucinate details in the absence of a specific prompt, which is essentially what I’m asking GPT to do. Except GPT can synthesize the world description and the character description, and then pull out just the important visual details.
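A minimal sketch of how that request could be assembled, assuming the three parts are simply joined with blank lines. The function names are hypothetical, and treating the style line ("Full body digital art portrait of ...") as a separate prefix added before Midjourney sees the text is also an assumption, not something the tool is known to do.

```python
# Instruction text quoted from the post; everything else is a hypothetical sketch.
PORTRAIT_INSTRUCTION = (
    "Give a description of the person in the form of a picture. "
    "Do not assume any context. Focus on the visual details. "
    "Use 3-4 sentences."
)


def build_portrait_request(world_context: str, character_description: str) -> str:
    """Assemble the text sent to GPT: world context, character, then instruction."""
    parts = [world_context.strip(), character_description.strip(), PORTRAIT_INSTRUCTION]
    return "\n\n".join(parts)


def build_midjourney_prompt(style_prefix: str, gpt_description: str) -> str:
    """Put a style line in front of GPT's visual description (assumed step)."""
    return f"{style_prefix.strip()}: {gpt_description.strip()}"
```

The point of the two steps is that GPT sees the whole world while Midjourney only sees the distilled visual details.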

This is important! As an example I created a candy city where everyone was made of candy. An example character:

Caramel Elise (Council member): Caramel Elise is a shrewd and calculating candy who always has an eye on the bottom line. She is a caramel candy.

If I feed the character description directly into Midjourney I get these:

GPT translates her description into something more specific:

Full body digital art portrait of a candy person: Caramel Elise has a warm, golden-brown hue with a smooth, glossy surface. She has a rectangular shape and slightly curved edges, as if she’s been poured into a mold. Her expression is serious and determined, with a hint of a smirk on her lips.

And the result captures the all-important fact that she’s literally made of candy:

Character race

One as-yet-unresolved issue in character generation and image generation is a tendency to default to white characters.

This should be contrasted with gender, where by default GPT is very balanced in its gender representation. I’ve mostly left gender unspecified in the world descriptions and GPT will create a roughly equal number of men and women characters, and even quite a few characters who use “they” as their pronouns. I’m sure GPT would be willing to stereotype genders if I made world notes to that effect, but I appreciate a balanced default when it’s unspecified.

Typically the source character descriptions don’t specify any race at all, nor are there enough cues to guess at the race. What I think is happening is a series of choices that narrow the possible race:

- I ask GPT to make characters that are interesting and distinct.

- GPT’s idea of unique character descriptions includes lots of “piercing blue eyes” and “angular features”. (GPT has no interest in brown eyes!)

- GPT’s preference for hair color is also affected, with a preference for black, blonde, and red hair.

- Ironically I think GPT has such a strong preference for black hair over brown because that’s a more striking hair color on white people. It’s choosing the kinds of features an author would mention.

Many of these features effectively create a constraint that the character is white. Even if it picks black hair it may throw in blue eyes or freckles, implying the person is white. Or if GPT chooses red hair then any other attributes will still result in a white character.

Here the answer is probably not too hard: tell GPT to specify race explicitly. Especially if GPT starts with a race then it’s going to create features that align with that race. I’ve tried an initial experiment where I simply ask GPT to first specify the race as part of the visual description and it helps quite a bit.

Character attractiveness/etc

In addition to race, both GPT and Midjourney default to a few body types. Women and men are often described as slender, though men are occasionally muscular. Midjourney takes both of these to an extreme; here’s someone who’s just “a tall man with a muscular build”:

Or this might be a cool character study but it does not radiate gardener:

Lots of beautiful ladies

It can also be hard to make women who aren’t beautiful or sexy. For instance here’s Kiera Ironclad, who was given a description that only emphasized her strength:

Kiera is a tough and experienced guard, responsible for keeping the guests of the Masked Ballroom safe. She wears a mask made of steel, symbolizing her strength and reliability.

GPT’s physical description is consistent:

Kiera Ironclad stands tall and proud, with a muscular build and a stern expression. Her steel mask covers her face, except for her piercing blue eyes that seem to glow in the dimly lit ballroom. She wears a black uniform with silver accents, and a sword on her belt, ready to protect anyone who might need her help.

But Midjourney demands she be some kind of catwoman:

I tried to use “stocky build” to encourage an entirely different body type, and while Midjourney did tone itself down a little it definitely didn’t hear me:

In part it’s probably picking up on video game aesthetics. Stable Diffusion (v2.1) is only worse:

I thought I had discovered a better generation when another woman guard was given a more appropriate portrait, only to find that GPT had changed this character’s pronouns from “she” to “they” when creating the portrait prompt. Unexpected, but effective:

In another example:

This person’s description:

Long, silver hair that falls in gentle waves down her back, and she wears a flowing, white gown that glimmers in the soft light.

GPT went overboard with this description but didn’t actually give her any body type or age in the description. She comes out like this:

Like race it might be possible to tone this down by asking GPT to make specific statements.

Another possible cause is that Midjourney reads some fairly innocuous-sounding terms in a very specific way. For instance “glowing eyes” will make anything evil.

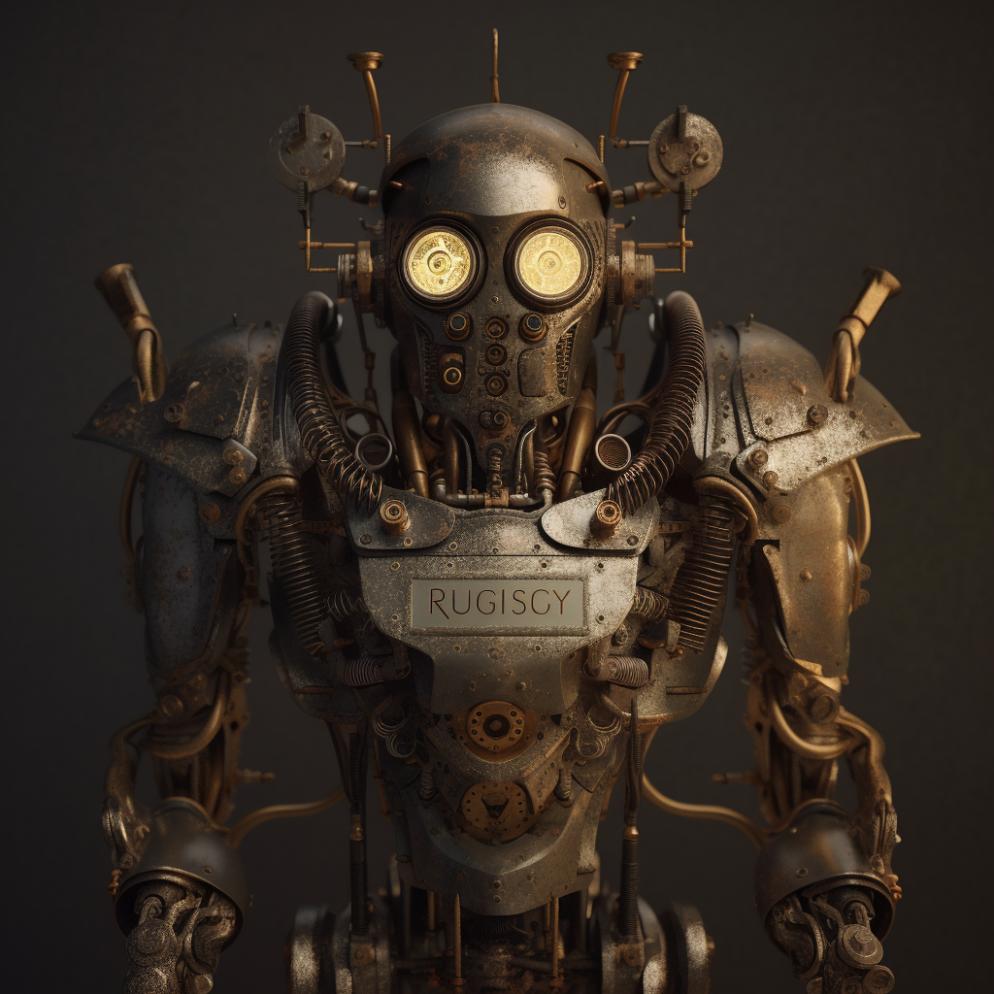

Here’s a character, Iron Giant, with glowing eyes:

And without:

So GPT may be producing terms that seem neutral but imply more than we want.

Another way to think about this: Midjourney, more than the other image generators, has a strong feedback loop of human preference. I suspect that’s why it has such a good default style: it’s not just learning to be accurate to the prompt, it’s also learning to make something compelling with the prompt. And what do people like? Beautiful people! That’s basically what “beautiful” means! In a single image I am, of course, not immune to the same appeal. But it gets old, feels incongruous, and lacks authenticity to an imagined world.

Everyone’s fancy

I have to accept some blame for this, I did imagine a fancy city where people wear fancy stuff, but Midjourney takes it too far. Midjourney likes fancy, everyone likes fancy pictures, they are very impressive. But not every person is impressive!

For instance, here’s Lorelei Moondrop. She’s a box office clerk; not a sorceress or ethereal being, just a box office clerk:

The description doesn’t call for this:

The person in the picture is a young woman wearing a mask made of smooth wood. She has long, dark hair that cascades down her shoulders. She wears a simple, yet elegant dress that flows to her feet, with intricate patterns of stars and moons adorning it.

What I did wrong here is to ask GPT to only focus on the character’s appearance, given the character description. And it does suffer from a bit of purple prose (purple prompting?), which Midjourney in turn picks up on (e.g., “simple, yet elegant dress that flows to her feet”).

I think I might do better to ask GPT to fill out a kind of template, like:

Race: [black/white/Asian/South Asian/etc]

Social class: [homeless/noble/working class/bourgeois/etc]

Job: $jobType

Job location: [where $jobType typically is held]

Visual description: [A description of the person in the form of a picture, set somewhere typical for $jobType. Focus on visual details and keywords. Use 2-3 sentences]

Then I give Midjourney only the visual description, having first prepped GPT with a wider awareness of the character. In some early testing this seems to help quite a bit with male characters, but female characters are much more resistant to de-prettification.
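Pulling just the visual description back out of a filled-in template could look something like this. It is a sketch that assumes GPT echoes the field labels exactly as written in the template above; `parse_filled_template` and `FIELDS` are hypothetical names.

```python
# Field labels copied from the template in the post.
FIELDS = ("Race", "Social class", "Job", "Job location", "Visual description")


def parse_filled_template(text: str) -> dict[str, str]:
    """Split a filled template into fields; unlabeled lines continue the previous field."""
    fields: dict[str, str] = {}
    current = None
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() in FIELDS:
            current = key.strip()
            fields[current] = value.strip()
        elif current and line.strip():
            # Assumption: a line without a known label belongs to the field above it.
            fields[current] += " " + line.strip()
    return fields
```

Only `parse_filled_template(reply)["Visual description"]` would go on to Midjourney; the other fields exist to steer GPT while it writes that description.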

Buildings

Buildings are a lot easier: no demographics or biases here!

In my last post I focused on isometric images of buildings. This time I went for street views:

There’s a lot left to explore in how buildings are presented. There’s a couple things we want to do:

- Instantiate the idea or description of a building in a concrete form

- Give a vibe to the characters and events in that building

- Create an anchor, a sense of place, something to remember

- Make the location feel alive

- Support navigating among these buildings

Street view gives some sense of entering the building. Isometric is great for navigation, and by normalizing buildings to an isometric format I think it can make them more approachable.

For being in a building you probably want an interior. But what makes that interior come alive? Not real, but present, concrete. I sense a problem where the eye just floats over these images, places, and characters. They are fancy, even dramatic, but not engaging.

I think there may be other, more structured ways to present these buildings. To make things comprehensible in terms of theme and variation. This is one benefit of character classes: you get a category to put someone in, then you can define them in terms of how they defy that category.

Kris Kashtanova shared a prompt that created level designs and it added another interesting lens on these buildings. Here’s the street view of Moonbeam Manor:

And here is its level design:

The second image is much less realistic but feels embodied in a much more concrete way. Even if you can’t walk around it, that interior image tells a story that an image from a realistic perspective cannot.

But you can use entirely different styles, exploring with only changes to the prompt text:

There’s many ways to tell a visual story, and a lot to explore here.

Chatting with your creations

One of the great things about doing stuff with GPT is that it’s a sort of everything engine. So once it’s set up to invent characters it’s also easy to add a chatbot or whatever else.

I added another step to turn a character description (with all the rest of the context) into a chat prompt, and then a simple chat interface. Here’s an example of the prompt built from a character:

Character description: Thorne Nightshade is a mysterious and enigmatic representative of the Shadow District in the Senate. He rarely speaks in public and prefers to work behind the scenes, using his network of spies and informants to gather intelligence on his rivals. His mask is made of black leather and has a slim, pointed beak that resembles that of a crow.

Chat prompt: Thorne Nightshade is a representative of the Shadow District in the Labyrinthine Senate. He is known to be a mysterious and enigmatic figure who prefers to work behind the scenes rather than speak in public. He wears a mask made of black leather with a slim, pointed beak that resembles that of a crow. If you were to speak with Thorne, he may speak in a hushed tone and ask pointed questions to gather information. He is highly observant and analytical which can make him appear unapproachable at times, but if he deems you trustworthy, he can be a valuable ally in navigating the political landscape of Veilvale.

Combine that with some context and instructions and you get a surprisingly good chat! By creating a chat prompt you are asking GPT to infer more conversation-related details; perhaps it could infer these directly from the character description, but those inferences may not be stable. By generating and storing a prompt the personality becomes stable.

One important addition is that I append “Respond as the character [name]” to the user’s actual message. This is effectively a high-powered reminder to stay in character. Note that GPT has no real history, so I only append this to the user’s latest message, leaving it off of earlier messages.
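As a rough sketch of that reminder, assuming the role/content message format of the chat API and assuming the stored chat prompt goes in a system message (the post doesn’t say where it goes). `build_chat_messages` is a hypothetical helper.

```python
def build_chat_messages(chat_prompt, history, character_name):
    """Build the message list for one request.

    history is a list of (role, text) pairs with role "user" or "assistant".
    The stay-in-character reminder is added only to the latest user message.
    """
    messages = [{"role": "system", "content": chat_prompt}]
    last_user = max(
        (i for i, (role, _) in enumerate(history) if role == "user"),
        default=None,
    )
    for i, (role, text) in enumerate(history):
        if i == last_user:
            text = f"{text}\n\nRespond as the character {character_name}"
        messages.append({"role": role, "content": text})
    return messages
```

Because the whole list is rebuilt on every request, the reminder never accumulates on earlier messages.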

Here’s an example chat with one of the city’s automatons, an abrasive character that is still dedicated to keeping the city in good repair:

Player: hello there

Rusty: Hmph, what do you want? Can’t you see I’m busy here?

Player: I want to know the way through the maze

Rusty: Bah, the maze? That’s child’s play. If you can’t figure it out yourself, then you’re not worth my time. Besides, I’m not here to hold your hand and guide you through every little obstacle in this city. I have more important things to do, like fixing broken machines and keeping Veilvale’s technology up and running. So if you’ll excuse me, I have work to do.

Player: I have to get through the maze to fix an important machine

Rusty: Oh, I see. Well, that changes things. If you need to fix an important machine in the maze, then I suppose I can offer some assistance. But don’t expect it to be easy. The maze is designed to be tricky and not everyone comes out unscathed. However, since you seem to have a sense of purpose, I’m willing to help you out. But first, I need to know what kind of machine you’re fixing and what tools you’ll need. Give me the details and we can start planning our strategy to get through the maze.

Characters floating in space

While it’s cool to chat with the characters, it suffers from the same problem as most GPT chatbots created for entertainment: nothing matters.

I picture it like the character is sitting in a featureless room, expression blank, no desires or plans, simply waiting. You sit down across from this character, it looks at you but says nothing, just waits.

“Hello,” you say. The character lights up, engages you in discussion. But the character will not leave the room, will not leave the chair. They will act as though they are real, as though everything you say matters. Until you ask them to leave the chair. Maybe if you try hard enough they’ll even agree to leave the chair. But they will never leave the chair.

When you are done you stand up. The character still sits, eager anticipation on their face. As you leave the room you look back and they are still there, making eye contact with the void that you have left, poised and always ready.

At first it still seems fun, but the not-mattering will drain you.

This is not fatal: GPT can simulate arbitrary actions or perhaps judge specific criteria in the conversation. While it’s easy to build an isolated experience, these meaningful experiences are more difficult. But they’ll be worth it!

Chatting about… anything

Something my daughter came upon while playing around with the city: she happened into the Academy and wanted to learn about her astrological sign. And because GPT is an everything machine it actually knows all about that (though it’s cagey on making real predictions). I realized it could also teach math or anything else right in that same interface. It’s not free to do this, but the latent ability is there with some effort. And so you could have an in-game school where the player could learn and demonstrate real skills.

Plots

Things happen in an embodied world. This world definitely isn’t embodied yet, but it feels like there’s opportunities to move in that direction.

With this in mind I have tried a couple plot generation approaches…

Building plots with: summary, selection, events

In this model GPT makes suggestions at different levels. First it offers rough outlines of a story:

The Missing Heir: The heir to the Labyrinthine Senate has disappeared within the maze, and now the Senate is offering a reward for their safe return. However, rumors say that the Razormaws are behind the kidnapping, and the Cult of the Moon Goddess is secretly aiding them.

Once you’ve selected a plot GPT will take the summary and a list of all the characters, producing a suggestion of some characters and their roles in the story. Everyone gets a plot-specific role, but it can also create entirely new characters to serve plot roles, for instance:

Eliana Silvermist: Eliana is a young artist who is enamored with the legend of the Night King. She is unwittingly drawn into the cult’s plan when they mistake her for the missing heir.

Then less interestingly it picks out some relevant locations. This gives GPT a smaller set of things to work with as it develops the actual events.

The event list is where a plot really develops. Right now it’s listed as a kind of straight list of events for you to pick and choose from. That’s questionable, and assumes freestanding events that tie into an arc but don’t tie into each other. But that still works okay… the character descriptions create a framework that GPT fills in.

The Night King’s Lair: The investigators discover the Night King’s lair within the maze, filled with strange artifacts and esoteric books.

Characters: Thorne Nightshade, Aurora Sky

Locations: The Lost Grove

Time: 10 days

I can imagine applying these events to the world on a kind of schedule. If the world just ticks through the events then we’re saying the player has no real agency, events will transpire inevitably. But if this event generation is automated at a high enough quality then we can synthesize new events based on player action and ask GPT to again complete a new set of events. I think by looking ahead, even if it means creating events that will never come to pass, we can make GPT create both a satisfying arc and conclusion, and remake that arc and conclusion in response to the player.
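To make the schedule idea a little more concrete, here is a speculative sketch. It assumes “Time: 10 days” means an offset in days from the start of the plot, and `regenerate` stands in for a GPT request that proposes a new future given what has already happened. None of these names come from the tool.

```python
from dataclasses import dataclass, field


@dataclass(order=True)
class Event:
    day: int                            # assumed: days since the plot began
    title: str = field(compare=False)   # not used for ordering


def due_events(events, today):
    """Events that have happened by `today`, in chronological order."""
    return sorted(e for e in events if e.day <= today)


def replan(events, today, regenerate):
    """Keep the past, drop the unplayed future, and ask for a new one."""
    happened = due_events(events, today)
    return happened + sorted(regenerate(happened))
```

Calling `replan` after a significant player action throws away events that will now never come to pass, which is the cost of looking ahead.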

Murder Mysteries

My daughter suggested this after doing some chats with the characters, imagining that she could grill people and then accuse someone of being the murderer.

Murder mysteries are appealing because they have a very clear setup (a murder!) and a moment of obvious player participation: soon after the murder is discovered, with only intermittent action outside of the investigation.

In my first attempt I asked GPT to write a murder mystery, but (as is typical with GPT) it didn’t know where to stop. It invented detectives and red herrings and an action packed conclusion. No need for a player! But that’s not what I want.

So instead I kept it to the facts, with GPT as the omniscient narrator. Identify exactly who did it, why, when, how; identify other people with a motive; identify events leading up to the murder. I give it a template to fill out, avoiding a narrative voice.

Victim: Marina Rossi

Murderer: Hector Stone

Means of murder: Poisoned wine

Location of murder: Marina’s Crafts

Time of murder: 9pm

Motive: Marina discovered that Hector had been sabotaging The Stonemason’s Workshop, which had resulted in several accidents and injuries. In order to silence Marina, Hector murdered her.

Description: Marina was having a glass of wine after closing up her shop when Hector entered. They were talking for a while, and Marina suddenly appeared to be feeling ill and collapsed. Hector quickly left the scene.

This goes on at some length, fleshing out a wide variety of facts in one go. The intention here isn’t to have the author/user do much, but instead create a hands-free automatic murder mystery.

The factual source material then gets parsed in a second pass, pulling out specific information that needs to be used to construct later prompts, such as the victim’s exact name.
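That second pass could be as simple as the following sketch, which assumes the labels come back exactly as they appear in the template and fails loudly when one is missing. `extract_facts` and `REQUIRED` are hypothetical names.

```python
import re

# Labels taken from the template shown above.
REQUIRED = (
    "Victim",
    "Murderer",
    "Means of murder",
    "Location of murder",
    "Time of murder",
)


def extract_facts(template_text: str) -> dict[str, str]:
    """Pull the single-line facts that later prompts need out of GPT's reply."""
    facts = {}
    for label in REQUIRED:
        pattern = rf"^{re.escape(label)}:[ \t]*(.+)$"
        match = re.search(pattern, template_text, flags=re.MULTILINE)
        if match is None:
            raise ValueError(f"missing field: {label}")
        facts[label] = match.group(1).strip()
    return facts
```

Raising on a missing field matters here because a hands-free process has no author around to notice that GPT skipped a line.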

The last (still poorly implemented) step is to indicate what each character knows. That is, all the subjective knowledge that the player will piece together to discover the truth. Maybe this alone is enough for a playable game?

Eira Trefor: knows that Marina confided in her about her suspicions of Hector and sought her advice at The Seafarer’s House at 6pm on the day of the murder. Eira advised her to speak to the authorities and not to confront Hector alone.

Vladimir Kuznetsov: witnessed Marina receiving a gift from an anonymous sender (a bottle of wine) at her shop an hour before the murder, but did not see who the sender was. Vladimir may also know about Marauder Jones’s thieving tendencies.

I’ve only used GPT-4 sparingly on this project, but there are steps in this plot generation where it might perform significantly better, especially when given a large task.

The authoring process

While much of the authoring experience is the same as I wrote about before, there are things I noticed because I generated so much more world.

The Rule Of Cool

The Rule Of Cool:

all but the most pedantic of viewers will forgive liberties with reality as long as the result is wicked sweet or awesome

The authoring process is one where GPT throws out a bunch of ideas and you pick the ones you like, over and over. If you don’t like the ideas you can add suggestions, even simple suggestions like “make it weird.” I did this a lot!

As a result GPT is constantly tempting the author with awesome stuff. I happily went along…

Some of the things GPT tempted me into:

- A desert neighborhood called Shifting Sands where shape-shifters practice their arts. I don’t know where these shape-shifters came from, but that sounds awesome! Later I would realize that you can’t fit a desert inside a city.

- Bioluminescent Bay, that’s cool right? Again, physically unclear, but how can I say no to glowing marine life…

- It all started with this prompt: A city in the center of a maze 🌀, where travelers often lose their way and the locals always wear masks to hide their true identities. That sounds awesome! But integrating a maze structure is very hard and got lost in the generation. (I went long on masks though.)

- Silver Haven: for those who seek refuge and peace, a sanctuary where automatons are created to aid those in need. Robotic therapists seemed pretty on point for this moment so I couldn’t pass it up. But it ended up as a kind of bland retreat.

GPT does not roll fair dice

This authoring process relies on GPT coming up with unique and creative ideas. It’s pretty good at this, but what it does well for one attribute can feel repetitive when it happens over and over.

For instance in Veilvale there are 29 people (out of 370) with the last name “Nightshade”. It’s a cool name I suppose, but not that cool. In another experiment Colin Fraser asked GPT for a random noun and it returned “Pineapple” 11% of the time.

The cool thing with something like name generation is that it can create punny combinations, like Sage Pepper for a cook, and other less obvious names that still rhyme with the spirit of the character.

But sometimes we don’t want randomness so much as distribution. It’s not to say that we only ever want one distribution, but just a conscious explicit distribution.

Also there should only be one, or at least no more than a couple “best cooks”. GPT isn’t the only one who is drawn to hyperbole, so it’s fine if some things are overstated, but you can’t make every character exceptional. You can still make every character unique! But you need to make them unique on a variety of spectrums.

I’m not quite sure how to resolve this. Maybe pre-roll some core things and then seed the prompts with the results. But I don’t have any clear answer for the hyperbole.
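One way the pre-rolling idea might look, as a sketch: measure how lopsided the existing names are, then draw surnames from a capped deck and hand each one to GPT as part of the prompt. The function names and the cap are hypothetical, and this does nothing for the hyperbole problem.

```python
import random
from collections import Counter


def surname_share(names):
    """Fraction of characters carrying each last name."""
    counts = Counter(name.split()[-1] for name in names)
    return {surname: count / len(names) for surname, count in counts.items()}


def preroll_surnames(pool, count, max_repeats, seed=None):
    """Draw `count` surnames so that none is used more than `max_repeats` times."""
    deck = [surname for surname in pool for _ in range(max_repeats)]
    if count > len(deck):
        raise ValueError("pool too small for the requested cap")
    return random.Random(seed).sample(deck, count)
```

Each rolled surname could then be added to a character prompt (for example “Use the last name Moondrop”), leaving GPT to pick a first name that still fits the spirit of the character.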

People existing in and out of a context

Because of the hierarchical composition process all people “belong” somewhere:

- Factions have faction members. Faction members are created specifically in the context of their one faction.

- Buildings have occupants. The person is built to occupy a building.

- Plots can add new characters that serve a purpose in the plot.

An alternative might be to create a pool of people and then assign them, adjusting descriptions to fit their new home. Maybe that would be better, but I think giving GPT the freedom to create characters for a specific purpose is the right choice.

But it’s not the right choice that it ends there. A few problems:

- People don’t relate to each other. When GPT creates a single list of characters it can and does relate them sometimes, for instance introducing a leader and an assistant. But that’s just an accidental feature. Density of narrative is a feature that’s missing from this process, there aren’t enough loops, enough serendipitous relationships. Even in a large city you should keep bumping into the same people for different reasons. That might not be realistic but it’s enjoyable.

- There’s no one schema for people. People in buildings have a property that relates them to the building (like a job). People in factions have a role. “Protagonists” (narrative free agents) have a heroic archetype. I don’t think we want to overspecify people and demand that all attributes be filled in, but it makes the coding harder when each character is only half-filled-out.

Dustin Freeman gave me the idea of doing this as a kind of fix-up: generate a cast of fully situated characters, but then start to add new properties and relationships. I’m imagining something like:

- Create a list of characters. If there’s too many for GPT to process then just sample a few randomly.

- Ask GPT to suggest the basic outline of some relationships. E.g., “Tom and Eileen are brother and sister”. Let GPT pick and choose who to match. Maybe the author selects which relationships to keep, or maybe they just scan and mass accept.

- Represent the relationship formally somewhere.

- Go back to each person and rewrite their description to include the relationship.

This could also be done with characters and factions (so building occupants can belong to factions, factions can own buildings, or people can belong to two factions).
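A sketch of the bookkeeping for that fix-up, assuming relationships are stored as plain records and that the rewrite step is a follow-up request to GPT. The `Relationship` type, the helper names, and the wording of the rewrite request are all invented for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    person_a: str
    person_b: str
    kind: str  # e.g. "brother and sister"


def sample_cast(characters, limit, seed=None):
    """If there are too many characters for one prompt, sample a few randomly."""
    if len(characters) <= limit:
        return list(characters)
    return random.Random(seed).sample(characters, limit)


def relationships_for(name, relationships):
    """All formally recorded relationships that involve `name`."""
    return [r for r in relationships if name in (r.person_a, r.person_b)]


def rewrite_request(name, description, relationships):
    """Text asking GPT to fold a person's relationships into their description."""
    facts = [
        f"{r.person_a} and {r.person_b} are {r.kind}."
        for r in relationships_for(name, relationships)
    ]
    return (
        f"{description}\n\nRelationships:\n"
        + "\n".join(facts)
        + f"\n\nRewrite the description of {name} to include these relationships."
    )
```

Keeping the formal record separate from the prose means the same relationship can be written into both people’s descriptions without the two drifting apart.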

I’ve done something like this experimenting with “faction relationships” (like, inter-faction squabbles) but it falls flat, usually explaining obvious tensions between factions. Maybe I’m doing it wrong, or maybe there’s not enough material in that particular combination. (Maybe I need to focus on inter-factional histories instead of relationships?)

GPT 3.5 vs GPT 4

The tool is built to default to use GPT-3.5 (the gpt-3.5-turbo API) for all requests. Lots of people are jumping on GPT-4, and I’ve now done some work with both, including places where 3.5 definitely wasn’t sufficient for the task. But here I’m quite happy with GPT-3.5.

My general intuition is that GPT-4 is able to connect information across greater distances and to simultaneously follow a larger number of directions. This does come up, instructions can get lost as they accumulate. I think there’s ways to solve that with 3.5, such as refining generic/universal instructions to just those applicable to certain domains. For instance some instructions only apply to buildings (like building material), others only to character generation (like demographics) and so on.

The overall development pattern for GPT-4 might also be different than 3.5. There’s a lot of architecture that is there implicitly to keep down the size of any one prompt and response. With GPT-4 it may be possible to accomplish much more in one prompt, both because of a greater confidence in the output and because it’s able to handle more complexity in the request.

That said, GPT-3.5 is pretty great and I’m reluctant to just switch to something that’s bigger/better/slower/more-expensive.

A declarative approach to building

Inside the spaghetti code of City Maker was a much simpler idea waiting to come out, and this version is a complete rewrite of the previous code. I gave it the name LayerCraft with City Maker as a schema. I don’t know yet how widely applicable this approach is, but it feels like it’s touching on patterns that will be relevant for LLMs.

I’ll try to explain it concretely using a city:

- There’s many properties: name, factions, people, neighborhoods, buildings

- Some properties belong to others in a parent/child relationship, like buildings that belong to neighborhoods

- For any property GPT can generate options

- Generating these options requires a prompt; these prompts usually require context which creates dependencies. The prompts themselves are templates, dependencies are the substitution variables

- Once a prompt can be fulfilled GPT is immediately asked about it, the author can make choices, commit choices, and thus produce new properties ready to be filled out

In practice for the City Maker this means you choose a theme for your city, then backstory, then you pick out some neighborhood types that are created based on that background, and so on.
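The core of that idea fits in a few lines. This is a sketch under assumptions, not LayerCraft’s actual code: it uses Python’s `string.Template` for `$variable` substitution, treats the variables found in a prompt as that property’s dependencies, and uses a made-up three-property schema.

```python
from dataclasses import dataclass
from string import Template


@dataclass
class Property:
    name: str
    prompt: str  # prompt template; its $variables are the dependencies

    def dependencies(self) -> set[str]:
        """Names of the substitution variables used in the prompt template."""
        found = set()
        for match in Template.pattern.finditer(self.prompt):
            name = match.group("named") or match.group("braced")
            if name:
                found.add(name)
        return found


# Hypothetical schema; the prompt wording is invented for illustration.
SCHEMA = [
    Property("cityType", "Suggest themes for a fantastical city."),
    Property("cityBackstory", "Write a backstory for a city that is $cityType."),
    Property("neighborhoods", "The city is $cityType. $cityBackstory\nList neighborhood types."),
]


def ready_properties(schema, committed):
    """Properties not yet chosen whose dependencies have all been committed."""
    have = set(committed)
    return [p.name for p in schema if p.name not in have and p.dependencies() <= have]


def render(prop, committed):
    """Fill the template with the author's committed choices."""
    return Template(prop.prompt).substitute(committed)
```

Each time the author commits a choice, `ready_properties` is what decides which prompts can now go to GPT, which is how theme unlocks backstory and backstory unlocks neighborhoods.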

The prompts themselves are constructed to elicit the best result from GPT. Good prompts:

- Give the necessary context without giving too much distracting context

- Show concrete examples that neither over-specify nor under-specify the desired results

- Give GPT broad guidance (“exciting”, “simple”, etc)

- Identify the schema for the results

For instance, the schema for building occupants:

The city $cityName that is $cityType, $cityPeriod. $cityBackstory

A list of $building.jobTypes and other inhabitants for $building ($building.description). Give each person an interesting and culturally appropriate name and a colorful background and personality. Include negative attributes.

Respond with a JSON list like:

[
  {
    type: $building.jobTypes|first|repr|json, // Or $building.jobTypes|rest
    name: "FirstName LastName",
    description: "[a description of the person, their profession or role, their personality, their history]",
    age: 30, // age in years, 5-100
    arrives: "8am",
    leaves: "6pm",
  }
]

The building schema includes jobTypes which informs the example. The prompt could just offer some generic advice, but I’ve found there’s often an advantage in establishing options as part of the context.

The particular implementation of this in LayerCraft isn’t half as general as this needs to be, but I’m very optimistic about the approach.

Updates and dependencies

Building up incrementally gives steering power to the author, but not correction. This is a common problem in the authoring process because it’s hard to know the results of some of those early choices until you’ve seen what they’ve produced. And if you are creating dozens of characters based on these prompts you might not see the problems until you are halfway in.

If we treated this process as a pure question of dependencies, and treated the schema-defined graph like a Makefile, then changing early choices would simply regenerate later choices. But the author probably liked many of those choices!
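The Makefile view amounts to a graph walk. A minimal sketch, assuming the dependency graph is available as a mapping from each property to the properties whose prompts use it (the example graph in the usage note is made up):

```python
from collections import deque


def downstream(dependents, changed):
    """Every property that would be regenerated if `changed` were edited.

    dependents maps a property name to the properties that depend on it.
    Results are in breadth-first order, nearest dependents first.
    """
    stale = []
    seen = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for child in dependents.get(current, ()):
            if child not in seen:
                seen.add(child)
                stale.append(child)
                queue.append(child)
    return stale
```

With a graph like `{"cityBackstory": ["neighborhoods", "factions"], "neighborhoods": ["buildings"]}`, editing the backstory marks neighborhoods, factions, and buildings as stale. That list is exactly the set of choices the author may not want thrown away, so it is better treated as “candidates to review” than “things to regenerate”.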

As an example: I created a candy city. Part way through I realized it would be more fun if the characters were each made of candy. I didn’t really want to change everyone’s role, but only to make sure each description said what kind of candy the person was. I added an instruction to the backstory: “the people of Gummytown are made out of candy, with some being gummies, others being chocolates, and a few being marshmallows or candy canes. For each person specify what kind of candy they are in their description.”

How do you tell which properties this applies to? How do you apply this change to those properties? GPT is capable of both of these things, but not in a deterministic or entirely reliable way. Fixing this is significant work and definitely needs to be present in the UI itself.

Getting meta in the prompt

The use of context (such as backstory) lets the author broadly control what’s being created, but it’s often underpowered. It also can’t change the shape of what’s returned, such as adding a new property to a JSON example.

You can do a lot with GPT prompts and concatenation. That is, you can “edit” a prompt by just adding more instructions. If those instructions conflict then GPT will try its best to reconcile them. This is usually enough, and it’s why this entire approach works.
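Editing by concatenation is simple enough to sketch in a few lines. The `buildPrompt` helper and the "Additional instructions:" heading are assumptions for illustration, not the wording LayerCraft uses.

```javascript
// Append the author's notes to a base prompt without touching the base text.
function buildPrompt(basePrompt, authorNotes) {
  const notes = authorNotes
    .map((note) => note.trim())
    .filter((note) => note.length > 0); // ignore blank notes
  if (notes.length === 0) return basePrompt;
  const bullets = notes.map((note) => `- ${note}`).join("\n");
  return `${basePrompt}\n\nAdditional instructions:\n${bullets}`;
}

const base =
  "A list of inhabitants for the building. Give each person a name and a description.";
const edited = buildPrompt(base, ["Describe each person's mask.", "   "]);
console.log(edited);
```

Nothing in the base prompt is rewritten, which is why a later instruction that contradicts it leaves GPT to sort out the conflict.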

As an example where this worked: I added the request “describe each person’s mask” to Veilvale person creation. It listened and consistently did so. But when I tried the instruction “make it a list of automatons” it balked. The earlier instruction clearly asked for people, automatons are not people, and GPT was confused by the conflict.

A next level is to put more of the core prompt material into properties. Yet the text of those prompt templates quickly turns into programming itself, a mode switch that’s hard even for a programmer if you aren’t in the driving seat.

If we turn back to GPT again to solve this we’ll need meta-prompts: descriptions of what the prompt needs to accomplish, and its constraints, so that GPT can apply natural-language edits to the prompts while maintaining the required structure.
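One possible shape for such a meta-prompt, sketched under heavy assumptions: `buildMetaPrompt`, its fields, and the wording are all hypothetical, and `missingRequiredParts` is only a cheap structural check on whatever GPT sends back, not a guarantee the edited prompt still works.

```javascript
// Wrap a prompt template in a description of its job and constraints,
// so GPT can be asked to edit the template itself.
function buildMetaPrompt({ purpose, constraints, currentPrompt, requestedEdit }) {
  return [
    `The following prompt template is used to: ${purpose}`,
    "The edited template must still satisfy these constraints:",
    ...constraints.map((constraint) => `- ${constraint}`),
    "Current template:",
    currentPrompt,
    `Requested change: ${requestedEdit}`,
    "Respond with only the full edited template.",
  ].join("\n");
}

// Which required pieces of the template went missing in the edit?
function missingRequiredParts(editedPrompt, requiredParts) {
  return requiredParts.filter((part) => !editedPrompt.includes(part));
}

const metaPrompt = buildMetaPrompt({
  purpose: "list the inhabitants of a building as JSON",
  constraints: ["Keep the JSON example", "Keep the name and description properties"],
  currentPrompt:
    'A list of people for $building.\nRespond with a JSON list like:\n[{name: "FirstName LastName", description: "..."}]',
  requestedEdit: "make it a list of automatons",
});

// A hand-written stand-in for what GPT might return.
const editedTemplate =
  'A list of automatons for $building.\nRespond with a JSON list like:\n[{name: "Designation", description: "..."}]';
console.log(missingRequiredParts(editedTemplate, ["$building", "name:", "description:"]));
```

The difference from plain concatenation is that the original instruction ("a list of people") gets rewritten rather than contradicted.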

Planning & action

While this is author-focused, I think the choices that GPT produces should be thought of as proposed plans. In City Maker it’s offering plans for how to build out a world. The author chooses options (and edits and makes notes on options) to guide, correct, and expand on what GPT produces on its own.

If GPT were producing code instead of options, that would also be a plan. Sometimes you can build plans on plans, i.e., build more code just by looking at existing code. But often you need the output of that code to keep going. Or you need feedback, refinement, etc. I think there could be a pattern:

- The author specifies the goal

- GPT proposes up to the limits of that specification

- The author previews the results

- The author adjusts, selects, refines the results, perhaps through choice, through dialog (chat), refined specification, etc

- The choices are committed, expanding the specification

- Any unresolved actions can be taken, code run, output collected, etc

- Repeat from the second step, with GPT proposing against the expanded specification

Unfortunately this doesn’t cover revision, as mentioned in the previous section, and I don’t know exactly what adding that to the loop would look like.
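The loop in the list above (without revision) could be sketched roughly like this. `runAuthoringLoop`, `propose`, and `choose` are hypothetical names: `propose` stands in for a GPT call and `choose` for the author's UI, and both are synchronous here only to keep the example short. The step where unresolved actions are run is omitted.

```javascript
// propose(spec) -> candidate options; choose(proposals, spec) -> accepted options.
function runAuthoringLoop({ specification, propose, choose, maxRounds }) {
  // Copy the specification so the caller's object is not mutated.
  const spec = { ...specification, choices: [...(specification.choices || [])] };
  for (let round = 0; round < maxRounds; round++) {
    const proposals = propose(spec); // GPT proposes up to the limits of the spec
    if (proposals.length === 0) break;
    const accepted = choose(proposals, spec); // author previews, adjusts, selects
    if (accepted.length === 0) break;
    spec.choices.push(...accepted); // committed choices expand the specification
  }
  return spec;
}

// Canned proposals in place of a model, and an "author" who takes the first one.
const canned = ["Market district", "Temple district", "Harbor district"];
const finalSpec = runAuthoringLoop({
  specification: { goal: "A city of masks", choices: [] },
  propose: (spec) => canned.filter((option) => !spec.choices.includes(option)),
  choose: (proposals) => proposals.slice(0, 1),
  maxRounds: 5,
});
console.log(finalSpec.choices);
```

Each round only ever adds to `spec.choices`, which is exactly why this shape has no place for revising an earlier choice.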

I’m glad fine tuning is dead (for like a month)

It’s tempting with these problems to think about “fine tuning” a model. That’s how AI has worked for a long time, and it feels like a good way to approach issues with reliability or ever-growing prompts that keep adding little instructions and caveats.

For now the fine tuning option is off the table if you are using the gpt-3.5-turbo (what this uses) or gpt-4 APIs, which are chat-based and offer no fine tuning. This probably won’t last for long, but right now you’ll almost always get better (cheaper, faster, more flexible) results by using these new models and forgoing fine tuning.

This is the future I want to see! Nothing I’ve described here is compatible with fine tuning. You can’t edit prompts and make sophisticated adjustments when the instructions are in the tuned model weights!

Prompting is artisanal AI. If you know something about a subject, and want to use that knowledge and your own sweat to iterate on a prompt, there is tremendous potential in prompt design. Prompt design isn’t enough right now because tooling is still limited, but prompt-focused tools can give people new agency on a very individual level. I’m developing both sides of LayerCraft (the tool and the prompts) and I still feel empowered by this abstraction.

When proposing prompt-focused functionality lots of people are all like blah blah blah, what about a moat, how do you differentiate yourself? It’s so self-centered… there’s a kind of thought that is addicted to consuming people rather than empowering them. Empowered people don’t lie down and play the part of being your moat.

Conclusion

It’s hard to conclude this because this is just one step along the way. (To where?) Some things that stand out to me:

- GPT prompts matter. It’s not just a matter of getting the prompt “right,” it’s iteration and exploration over a specific domain.

- When a user can enter text to affect a prompt they are also prompt engineering, and that’s the magic of this technology.

- These same things are true for image prompts, but without the complexity of recursively applying output to future inputs.

- Natural language is now a data structure. We’re probably all going to struggle to internalize this for a while.

- Steerability makes GPT a lot more fun than just gazing at its wise output.

- Chat is not the only way to interact with GPT.

If you want to try this yourself check out llm.ianbicking.org, or look at the code on GitHub. If you are curious about the exact prompts look in citymakerschema.js. Note that this is a personal project, built for personal exploration.

Similarly, when using the site be warned: I have not built it carefully for other people to use. Stuff will be weird, errors will appear only in the JS console, and there are no promises. And you’ll have to bring your own GPT API key!

Comments welcome on Mastodon or Twitter.