I’ve been running some experiments with roleplaying and LLMs (Large Language Models, such as ChatGPT). After a lot of coding I think it’s time to step back and think about what I’ve done and what I might want to do.

In this post I will describe some of the techniques I’ve used to improve LLM character behavior, larger experiments I’ve made, and then a long list of thoughts and questions.

This post assumes you have some familiarity with LLMs and constructing prompts (though I’ve tried to give the tiniest introduction).

Table of contents

- What is a prompt?

- Roleplay as chat…

- Larger world experiments…

- Thoughts and open questions

- Conclusion

Terms

Gamemaster: the LLM role that directs the story by mediating player actions, describing how time advances, etc.

Feat: some action that is hard enough that you might fail, generally requiring a roll with a random chance of failure or success.

What is a prompt?

I’m going to be talking a lot about prompts, and I want to be very clear about what a prompt is. I’ll talk about ChatGPT specifically here, but the other LLMs are all similar.

A request to GPT is a series of messages (plus some other uninteresting parameters). Each message has a role, one of: system for messages from the developer to GPT, user for messages from the user, and assistant for messages created by GPT.

So a prompt might start out as:

system: Play the part of William Shakespeare. Do not break character.

user: How is it going?

GPT does not have any memory of its own. Every time you send a message to GPT and get a response, it is responding from scratch. But if you send the whole transcript then GPT is good at faking it. So once you send a second message the prompt will look like:

system: Play the part of William Shakespeare. Do not break character.

user: How is it going?

assistant: 'Tis going well, my noble friend, for my quill doth dance upon the page with words of love and longing.

user: What are you writing?

That is, it will submit the entire history, asking GPT to produce the next “assistant” message.

Some things to note:

- If you ask GPT why it said something, it will look at the transcript and imagine why someone might have said that thing. It doesn’t have access to any past thought process.

- Everything in that prompt is under the control of the developer. The developer can put words in the assistant’s mouth, leave things out, or do anything.

- The last user message is very important: it’s what GPT will respond to directly. Like everything else, the developer can put whatever they want in there; it doesn’t have to be what the user typed. I almost always use that last message for instructions, with the user input wrapped in some context.

- You don’t have to maintain any history at all! You can make every request from scratch, including only the information and instructions you want.

- You don’t have to show the user exactly what GPT returns.

- Everything is part of the prompt: the beginning, all the intermediate messages, and the final message (even available functions are best understood as prompts). The LLM pays attention to all of it (though not equal attention), and there’s no clear distinction between “instruction” and “information”.

So when you see me talking about prompts imagine each prompt as a hand-constructed series of messages, which may or may not include history, and which is designed only to get the right response from the LLM.
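As a rough illustration of that idea, here is a minimal sketch of assembling a request by hand. The function name `build_messages` and the wording of the wrapper instruction are hypothetical, chosen only for illustration; the only thing taken from the description above is the role/content shape of each message.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(system: str, history: List[Message], player_input: str) -> List[Message]:
    """Assemble the complete list of messages for one request.

    Nothing is remembered between requests, so the transcript (or whatever
    part of it we choose to keep) is sent again every time.
    """
    messages: List[Message] = [{"role": "system", "content": system}]
    messages.extend(history)
    # The final user message is written by the developer: the raw input is
    # wrapped in context and instructions (hypothetical wording).
    final = (
        f"The player says: {player_input}\n"
        "Reply in character, in one or two sentences."
    )
    messages.append({"role": "user", "content": final})
    return messages


history = [
    {"role": "user", "content": "How is it going?"},
    {"role": "assistant", "content": "'Tis going well, my noble friend."},
]
messages = build_messages(
    "Play the part of William Shakespeare. Do not break character.",
    history,
    "What are you writing?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The history here is just a list the developer owns, so trimming it, rewriting it, or leaving it out entirely is a matter of what gets passed in.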

Implementing simple chat

To set the scene I will describe the basic progression I’ve gone through with LLM-assisted roleplay:

The easiest thing is just to set the system prompt and chat away! This works wildly better than anything from 2022, but it’s still just OK. This is mostly equivalent to pasting a roleplaying prompt into chat.openai.com

If you want to improve the chat itself (keep it from breaking character, make it act more decisively, give the character desires and goals) then there are some techniques that help:

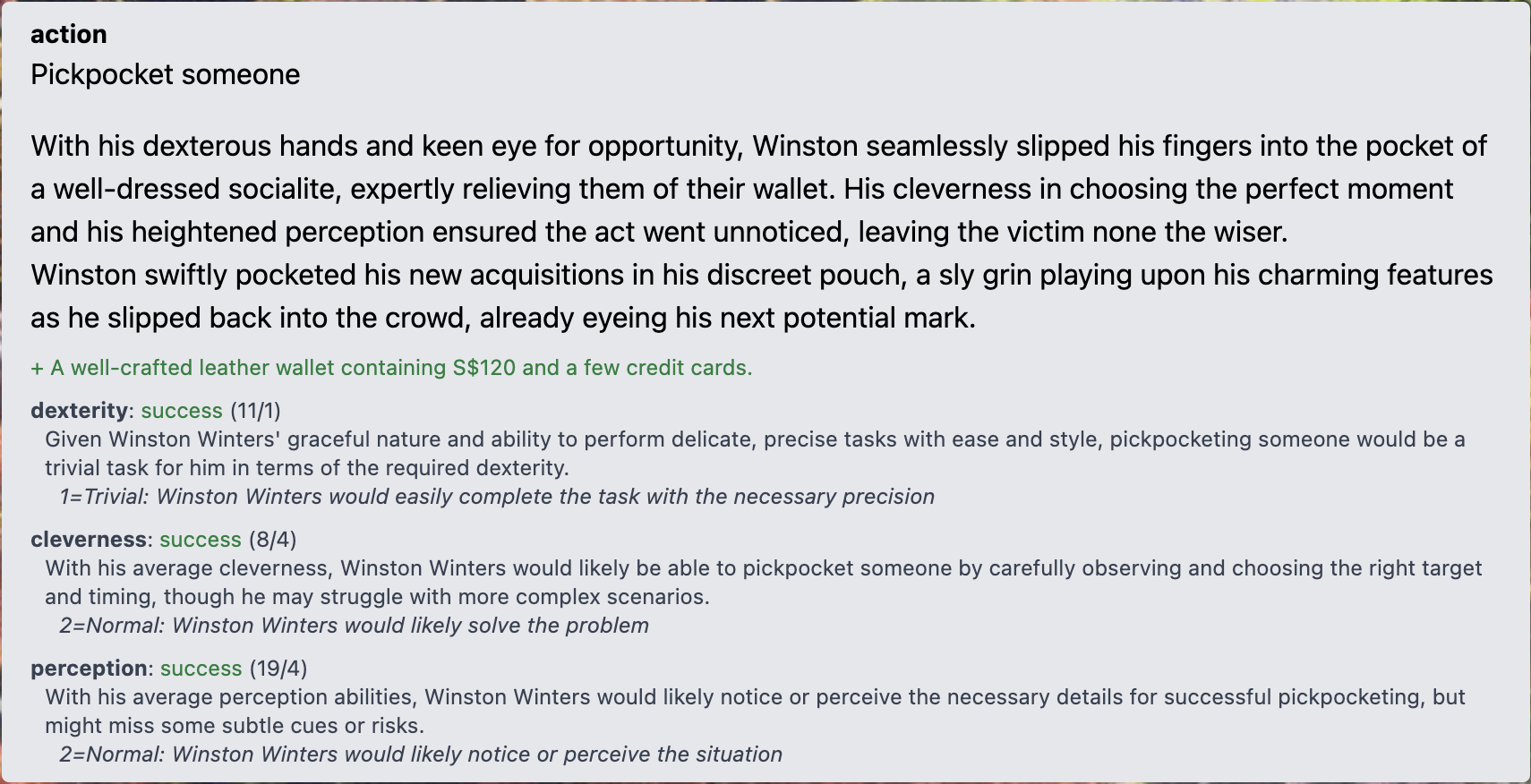

- Make it collaborative dialog writing instead of interacting directly with the LLM. This means a system prompt that describes the exercise as collaborative dialog writing where the user is “writing” one character and the LLM is “writing” the other character. If the player types “hi, how are you?” then you put something like: “John Doe says: hi, how are you?” in the prompt.

- Include instructions on how to respond at the end of the prompt, not just in the system prompt. Repeat the most important instructions in the beginning and end of the prompt and give pointed instructions on how to respond.

- Generate internal dialog or an internal thought process in addition to the normal dialog. Give the LLM room to think. (I learned this from Open Souls.) This means the LLM will write “thoughts” that you won’t show to the player.

- Summarize periodically (replacing the older transcript with the summary) so that the context stays more limited and fresh.

- Think hard about what attributes you want to capture in that summary. The summary is a chance to focus the LLM on the most interesting aspects of the conversation: conflict, attitude, notable events, forming opinions on the player character.

- Initialize the chat with a summary to set the stage. Even if the summary is sparse it will help focus the LLM on what you (the game developer) have decided to make the focus of this interaction.

- Compress dialog to remove some of the idle chatter and repetition that tends to arise, again keeping the context on-point.

These all require some coding (though it’s not very complex), and you can’t do them in a Custom GPT.

The prompts you use for each technique are also important. Prompting matters!

To see some of these approaches (and others) you can look at this example of a full prompt. It’s long!

Situated chat

All those techniques are helpful but the scenario is still weird with an entity floating in the ether until some nameless faceless entity known only as “user” comes up and says “hi”.

So the next thing you try is creating a setting. Name and describe the player. Create some sort of purpose to the conversation.

As an example of this I compared my William Shakespeare chat to Khanmigo’s, to give some sense of what both setting and good prompting techniques can do compared to Khanmigo’s apparently bare system prompting.

Activity alongside dialog

A quick extension is to add scene and action descriptions. Once you’ve set this up as a kind of collaborative writing process this is as simple as asking descriptions to go in <description>...</description> (and then displaying them appropriately).

Now you have some action, a setting that can be updated by keeping track of the changes in the descriptions, and potentially even consequences. But vague consequences: relying on the stream of descriptions to keep track of the world state is kind of mushy.
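Separating the descriptions from the dialog for display can be sketched as below. This is a minimal illustration that assumes the LLM reliably closes its `<description>` tags; the function name `split_reply` and the sample reply are hypothetical.

```python
import re
from typing import List, Tuple

TAG_PATTERN = re.compile(r"<description>(.*?)</description>", re.DOTALL)


def split_reply(reply: str) -> List[Tuple[str, str]]:
    """Split a reply into ("description", text) and ("dialog", text) parts, in order."""
    parts: List[Tuple[str, str]] = []
    position = 0
    for match in TAG_PATTERN.finditer(reply):
        # Anything before the tag is ordinary dialog.
        dialog = reply[position:match.start()].strip()
        if dialog:
            parts.append(("dialog", dialog))
        description = match.group(1).strip()
        if description:
            parts.append(("description", description))
        position = match.end()
    trailing = reply[position:].strip()
    if trailing:
        parts.append(("dialog", trailing))
    return parts


reply = (
    "<description>Shakespeare sets down his quill.</description>"
    "Good morrow! What brings thee here?"
)
parts = split_reply(reply)
for kind, text in parts:
    print(f"[{kind}] {text}")
```

The same approach would work for hidden “thoughts”: parse them out with their own tag and simply never display them.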

Saying goodbye

All that stuff above is cool, but if you’ve ever used one of these LLM chats you’ve probably come to the point when a conversation should end… but it can’t. You can never stop talking. Like ghosts cursed to a never ending conversation, or Minnesotans.

It’s not hard to have the LLM signal that a conversation should finish. But then what? If the player was in conversation and now they are not, then where are they? Something as simple as goodbye requires a whole world to return to.

Hallucination and (lack of) memory

One thing I didn’t mention as an enhancement is Retrieval Augmented Generation (RAG). You might think of RAG as “looking up information/memories and giving them to the LLM”.

A RAG system includes context in the prompt based on what seems most relevant at the moment. This might be conversation history that wouldn’t otherwise fit in the context or world details. (To see an example of roleplay-focused RAG using very simple retrieval techniques: SillyTavern Lorebooks)

I haven’t mentioned RAG because I’ve never implemented it, and feel little motivation to do so… it’s just not that interesting to talk to a character for that long, or have details perfectly recorded.

I find guided summarization is sufficient: periodically (when the transcript gets big enough, or there’s an event like a goodbye) have the LLM summarize the conversation up to that point, and then use that summary in place of the earlier transcript.
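A minimal sketch of that summarize-and-replace step follows. The thresholds, the `compact_transcript` name, and the stand-in summarizer are all assumptions for illustration; in practice `summarize` would be an LLM call with guidance about what to capture.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def compact_transcript(
    transcript: List[Message],
    summarize: Callable[[List[Message]], str],
    max_messages: int = 20,
    keep_recent: int = 6,
    force: bool = False,
) -> List[Message]:
    """Replace older messages with a summary once the transcript is large.

    `force` covers events such as a goodbye, where we summarize regardless
    of how long the transcript is.
    """
    if not force and len(transcript) <= max_messages:
        return transcript
    split = max(len(transcript) - keep_recent, 0)
    older, recent = transcript[:split], transcript[split:]
    if not older:
        return transcript
    summary = summarize(older)
    summary_message = {"role": "system", "content": "Summary so far: " + summary}
    return [summary_message] + recent


def fake_summarize(messages: List[Message]) -> str:
    # Stand-in for an LLM summarization prompt.
    return f"{len(messages)} earlier messages"


transcript = [{"role": "user", "content": str(i)} for i in range(10)]
compacted = compact_transcript(transcript, fake_summarize, max_messages=8, keep_recent=4)
print(compacted[0]["content"])
```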

But summarization does lose details: did either character learn specific facts during the conversation? Did someone describe a specific memory or personal history? The LLM will readily (and helpfully!) hallucinate all kinds of details, but without an explicit memory it’s all only as real as a dreamstate.

(This will come up again in a later section.)

A historical experiment

Every previous step seemed clearly additive to an experience that led only as far as a dissociated conversation. That’s not to say I fully explored singular conversations, but I had some other things I wanted to try…

The first idea I pursued is also the more ambitious: I really liked the idea of play-acting an entire life, in particular by roleplaying pivotal or symbolically important moments in the character’s life.

This idea took root in my head early when experimenting with GPT Choose Your Own Adventure authoring. GPT suggested a scenario that began with the player receiving a diagnosis for a terminal illness. Do you (a) call a family member, (b) take a walk to clear your head, (c) ask your doctor more questions. It feels absurd, but GPT was 100% straight-faced about the whole thing, and it didn’t feel absurd at all.

The idea of roleplaying serious rather than just fantastical scenarios stuck with me, and I thought about some of the choices and situations that we encounter through our lives as a source of material.

I imagined a few kinds of scenarios:

- Making a life decision: what profession to pursue, who to marry, and so on.

- Finding what’s important in your life: politics, art, family, etc.

- Expressing your personality: how you react, how you interact with others, how you respond to loss or success.

Because this would involve multiple scenarios spread over decades, you have to think about the character changing… but the world also changes around the character. Then you have to think about birth years and locations, and before long it has turned into a historical roleplay. https://youtu.be/u49KwijAwm8

That in turn leads into a whole digression with selecting a place and time in history, a character, a family, status, and so on… all of which is a fun little character creation game of its own, but still leads back to those pivotal moments.

What does it mean to play out these moments? That I haven’t figured out.

Specifically I’ve found it hard to create a compelling moment that is worth roleplaying, where the player can feel real autonomy and drive to accomplish something in the roleplay.

One of the problems is more technical: I’ve created an environment where the player has unlimited ability to engage in dialog, but physical actions are at best vague and dreamlike.

The freedom of dialog mirrors the real world: we are truly capable of saying just about anything at any time. We don’t exercise this ability, but it is entirely our choice. Physical action is different: we cannot physically do anything we choose, though realistically we are as unlikely to exercise our physical freedom as we are our verbal freedom. The player as a character is divorced from the fullness of being that is a real person: they don’t know fear, shame, awkwardness… all of which is a feature (encouraging exploration) and yet makes it very hard to simulate. There’s not much structure.

Some other questions I have about creating good scenarios:

- Am I giving the player the ability to exercise their preferred alignment via the character? To be a hero, villain, etc?

- Does the player want to be tested? And in what way?

- What pushback is fun? What is unwelcome? In other words, how freely do I allow the player to act? How much should the system prevent a character from taking unreasonable actions?

- If the character exemplifies something (deviousness, intelligence, ignorance, etc), to what degree is that something the game should do, vs the player? If you are a well educated Babylonian merchant is it a requirement of the play that you actually become well educated for that time?

- Physical activities have some of the same questions… how many rules do we handle with the gameplay (like locations, entrances, objects, etc), and how many are conjured into being in the narrative? Does the player directly perform the actions (mashing buttons) or do you let the narrative and dice rolls direct the outcome?

- If the player enters text, what voice or frame is that text in? Are they speaking as the character? Describing an action? Making a request, such as asking for an extended description? Which of these happen effortlessly (i.e., with no gamemaster mediation)?

A game mechanics experiment

I felt/feel stuck on the first project, without any clear theory for another version of the gameplay. And perhaps I had too many expectations about what I wanted to accomplish in that game.

I decided to take a step back and work on something more relaxed. I had created an imaginary city builder a while back (part 1, part 2). I personally still find the tool fun and I like the building process, though the tool is weird and not really accessible to other people.

Using one of these cities solves one of my problems: where are you once you’ve said goodbye? So I started putting together another similar game with a more fantasy tone and with a pre-existing set of locations and cast of characters.

There are three notable parts I experimented with:

- Intent parsing: this separates the input into dialog, intent to do an action, and a few direct commands: movement, ending the conversation, and inspecting something. The action types in turn invoke different LLM prompts and pipelines.

- Ability, stats, and action resolution: the LLM determines what abilities are needed to perform an action, and at what difficulty, and then between LLM calls the dice are rolled and the LLM determines the effects given the success/failure results.

- Some ad hoc but updateable attributes like inventory and injuries.

In this game you can walk around, do actions at about any granularity (“search for a fallen nickel” or “take over the world”), and talk with the characters.

The three things fit together:

- Intent parsing clarifies what prompts to invoke and separates simple actions (like saying something) from feats.

- The action resolution handles those feats.

- Updatable attributes make the resolution feel more sticky, like “real” things are being updated as a result.
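The routing half of intent parsing can be sketched as follows. This assumes the intent-parsing prompt asks the LLM to reply with a small JSON object; that reply format, the intent names, and the pipeline names are all hypothetical stand-ins rather than the actual structures used in the game.

```python
import json
from dataclasses import dataclass

# Hypothetical mapping from parsed intent to the pipeline that handles it.
PIPELINES = {
    "dialog": "npc_reply",
    "feat": "action_resolution",
    "move": "movement",
    "end_conversation": "goodbye",
    "inspect": "inspection",
}


@dataclass
class Intent:
    type: str
    text: str


def parse_intent(llm_output: str, player_input: str) -> Intent:
    """Parse the (assumed) JSON reply from the intent-parsing prompt.

    If the reply is malformed or names an unknown intent, fall back to
    treating the player's raw input as plain dialog.
    """
    try:
        data = json.loads(llm_output)
        intent_type = data["type"]
        text = str(data["text"])
    except (json.JSONDecodeError, KeyError, TypeError):
        return Intent("dialog", player_input)
    if intent_type not in PIPELINES:
        return Intent("dialog", player_input)
    return Intent(intent_type, text)


def route(intent: Intent) -> str:
    """Return the name of the pipeline that should handle this intent."""
    return PIPELINES[intent.type]


intent = parse_intent('{"type": "feat", "text": "pick the lock"}', "I pick the lock")
print(intent.type, "->", route(intent))
```

Falling back to dialog is only one possible choice; asking the player to rephrase would be another.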

Action resolution is the most interesting and takes several steps:

- Player skills are represented with natural language, such as “feeble”, “weak”, “strong”, etc. (Here’s an example of the complete structure for a couple skills)

- This doesn’t offer a lot of granularity (4-5 levels), but LLMs don’t operate well simply on numbers without some kind of rubric. Instead of creating a rubric that will struggle to produce numbers I decided to make the rubric the canon.

- Given an attempted action the LLM will determine what skills are relevant (knowledge, strength, speed, etc) and begin to think about how challenging the task is in terms of this skill.

- The LLM tends to give a longer list of skills (2-3) than a normal gamemaster (who will usually choose one). Maybe that’s too many, or maybe it’s just right?

- Analyze the difficulty of the action with respect to each skill. I.e., for this character, will the action be trivial, normal, difficult, very difficult, or impossible?

- Now the granularity is even worse! The two ends (trivial and impossible) are not very interesting, so there’s really just 3 levels of difficulty.

- And then how often should these succeed?

- The game rolls dice and based on the roll and difficulty it assigns success/failure (maybe a critical) to each skill.

- The list of success/failure is given to the LLM and it is asked to describe the results of the attempt, including concrete results like gaining or losing inventory or being injured.
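The dice step that sits between the two LLM calls might look like the sketch below. The success chances and the 5% critical slice are placeholder numbers (how often each difficulty should succeed is exactly the open question above), and the function names are hypothetical.

```python
import random
from typing import Dict

# Placeholder odds per difficulty level; these numbers are assumptions.
SUCCESS_CHANCE = {
    "trivial": 1.0,
    "normal": 0.8,
    "difficult": 0.5,
    "very difficult": 0.2,
    "impossible": 0.0,
}
CRITICAL_SLICE = 0.05  # assumed: lowest/highest 5% of rolls are criticals


def resolve_skill(difficulty: str, rng: random.Random) -> str:
    """Roll once and turn the roll into an outcome label for one skill."""
    chance = SUCCESS_CHANCE[difficulty]
    roll = rng.random()  # uniform in [0, 1)
    if roll < chance:
        return "critical success" if roll < CRITICAL_SLICE else "success"
    return "critical failure" if roll >= 1.0 - CRITICAL_SLICE else "failure"


def resolve_action(difficulties: Dict[str, str], rng: random.Random) -> Dict[str, str]:
    """Resolve every skill the LLM judged relevant to the attempted action."""
    return {skill: resolve_skill(level, rng) for skill, level in difficulties.items()}


def describe_results(results: Dict[str, str]) -> str:
    """Format the outcomes as text to hand to the outcome-describing prompt."""
    return "\n".join(f"{skill}: {outcome}" for skill, outcome in results.items())


rng = random.Random(7)
results = resolve_action(
    {"dexterity": "difficult", "knowledge": "trivial", "strength": "impossible"},
    rng,
)
print(describe_results(results))
```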

It’s fun to see the breakdown, the success/failures, and the ultimate effect of your action.

Still, the game notably lacks a point: there are no goals, you just wander interminably. The world you inhabit is also static: there are small and limited updates, but most action is forgotten and inconsequential at the code/persistence level.

Thoughts and open questions

Given all that, I’m going to write down a big list of thoughts: questions I have, ideas that might work, things to look into.

Action and resolution

In my own implementation I make a strong distinction between feats that require action resolution and simple actions that happen automatically/without failure.

- Should a gamemaster step always happen? For example moving around is usually uninhibited, but while you can leave a store easily it’s not so easy to leave a jail.

- I’m not worried too much about the prompt overhead (cost and latency) of the gamemaster step… I assume both that this will get faster and cheaper with time, and that once I have something I like then there’s more reason to optimize.

- Also I have no idea what I’m going to do with any of this, so what am I even optimizing for?

Given natural language descriptions of skill levels it’s hard to have more than a handful of levels, such as feeble/weak/average/strong/powerful; usually 4-5 levels.

- The number of levels is not the same for all skills. I only have 4 levels of cleverness, 5 for knowledge, 4 for dexterity… and sometimes “average” is level 2 and sometimes level 3. But I don’t think it matters, there’s no real need to normalize these.

- Because of the limited levels there are big jumps, and the probabilities for action resolution are hard to balance. It feels like there should be some mathematical modifier on top of the descriptions. Like you might have a dexterity of “nimble” but it can be +1 nimble which isn’t as good as graceful but is better than +0 nimble.

- The finer points of the math don’t feel as important when the LLM itself is quite arbitrary. I’m not sure if a human gamemaster is any less arbitrary, it’s just easier to ask the LLM the exact same question multiple times and get different answers while the human will stick to a decision.

- Creating the levels was a GPT-heavy task. The result (excerpt) is about 400 lines of JSON, and is a good example of AI assist: I don’t want to hand over all those distinctions to an LLM, but it was a big help in expanding the structures and brainstorming terms and phrases.
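One way the “+1 nimble” idea could work is sketched here. The dexterity ladder, the cap on modifiers, and the per-point bonus are all invented for illustration; the only constraint taken from the text is that +1 nimble sits above +0 nimble and below graceful.

```python
from typing import List

# Hypothetical ladder; only "nimble" and "graceful" come from the text above.
DEXTERITY_LEVELS = ["clumsy", "average", "nimble", "graceful"]
MAX_MODIFIER = 2  # assumed cap, so a modified level never reaches the next one


def skill_score(level: str, modifier: int, levels: List[str]) -> float:
    """Place a described level plus a small modifier on one numeric scale."""
    if not 0 <= modifier <= MAX_MODIFIER:
        raise ValueError(f"modifier must be between 0 and {MAX_MODIFIER}")
    # Each modifier point is worth a fraction of a full level.
    return levels.index(level) + modifier / (MAX_MODIFIER + 1)


def adjusted_chance(base_chance: float, modifier: int, bonus_per_point: float = 0.05) -> float:
    """Nudge a success chance upward by a small amount per modifier point."""
    return min(1.0, base_chance + modifier * bonus_per_point)


plain = skill_score("nimble", 0, DEXTERITY_LEVELS)
boosted = skill_score("nimble", 1, DEXTERITY_LEVELS)
graceful = skill_score("graceful", 0, DEXTERITY_LEVELS)
print(plain, boosted, graceful)
print(adjusted_chance(0.5, 1))
```

The LLM would still only ever see the words; the modifier lives entirely in the code between prompts.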

The ability to spam action attempts may make it too easy to make big swings and eventually hit. You aren’t just swinging for a favorable roll of the dice, but also a favorable analysis by the LLM.

- Human gamemasters use their memory and a sense of annoyance to stop this.

- Another approach is an escalating cost of failure, so that if you fail multiple times in a row the results become more dire. Though a quick hack around this might be to attempt trivial feats (“I chew gum and walk at the same time”) to break a sequence of failures.

- Or just increasing the consequentiality of all attempts; make failure real and sticky. Even ask the LLM to come up with consequences that specifically punish multiple attempts.

- Given history the LLM does naturally apply some of these principles.
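The escalating cost of failure could be tracked with something as small as the sketch below. The severity labels and the `FailureTracker` class are hypothetical; the one deliberate design choice is that trivial feats neither extend nor reset the streak, which closes the gum-chewing loophole.

```python
from dataclasses import dataclass

# Hypothetical guidance strings to pass to the outcome-describing prompt.
SEVERITY = ["minor setback", "real cost", "serious harm", "dire consequences"]


@dataclass
class FailureTracker:
    streak: int = 0

    def record(self, difficulty: str, succeeded: bool) -> str:
        """Update the failure streak and return guidance for the outcome prompt."""
        if difficulty == "trivial":
            # Trivial feats are ignored so they cannot be used to reset the streak.
            return "no change"
        if succeeded:
            self.streak = 0
            return "no penalty"
        self.streak += 1
        # Severity grows with the streak and is capped at the last label.
        return SEVERITY[min(self.streak, len(SEVERITY)) - 1]


tracker = FailureTracker()
first = tracker.record("difficult", succeeded=False)
second = tracker.record("very difficult", succeeded=False)
ignored = tracker.record("trivial", succeeded=True)
third = tracker.record("difficult", succeeded=False)
print(first, second, ignored, third, sep=" | ")
```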

The gamemaster role is an arbiter; I think there’s another role worth exploring that I might call a “goalmaster”.

- The goalmaster is there to inspire the player to action and to arrange things behind the scenes so that the plot moves forward, the world isn’t arbitrary, themes are revisited, and so on.

- Traditionally the gamemaster does both roles, but separating roles is both easy and desirable with the LLM; each role is just a different prompt.

- It’s less clear to me exactly when the goalmaster is invoked. It feels like it should be more anticipatory than immediate. Some insulation is even preferable, it’s no fun if every interaction feels like it is telegraphing some ultimate purpose. The goalmaster shouldn’t be the hand of fate, it’s more a combination of serendipity and offering opportunities.

- I imagine the goalmaster implanting details, changing character histories, creating and scheduling events, creating secrets that characters can hold onto, influencing conversation initialization, and so on.

- Goalmasters could be specialized to different structures or genres. For instance a murder mystery or progressing through the Hero’s Journey. The goalmaster prompts would change, but the interface to the rest of the game could probably stay the same.

How direct should the player’s roleplaying be? Should the player act directly (speaking as their character), or indirectly (describing in broad strokes what the character should do and letting the game actually run the character)?

- While it would be cool to wire the game up to some kind of strength-o-meter, realistically attempts to do physical actions have to be resolved on behalf of the player, not through the player’s direct actions.

- … though arcade games do simulate physicality through dexterity and skill with the controllers.

- If you roleplay directly then how do you play an alien? An ancient Sumerian? Someone much smarter or much dumber than you? Someone charming and attractive or someone repulsive?

- We could allow indirect roleplay (e.g., charm the crowd by rolling the dice) but also if the player is more direct in their roleplay that could give a bonus. So if you describe exactly how you do something, or how you behave, or what you know, then you have a higher likelihood of succeeding.

- Gaining knowledge and ideas for how to do direct roleplay could be supported in the interface via different kinds of help. Especially in the historical roleplay I like the idea of learning history on demand to support your in-game goals. The game as an open book test.

- Maybe during difficulty analysis the LLM could offer bonus points, while still keeping to the simple difficulty levels.

- The game Infinite Worlds is an example of indirect action: from what I can tell it’s basically writing a story, and the player gives notes on direction. Those can be specific or not, but either way it’s all rewritten to create a singular narrative.

How an action is described could be a new gameplay element.

- The LLM is deciding what skills come into play for a certain activity. The finer points of how you describe the action can affect that, and thus affect which skills are used and your likelihood of success. “Use subterfuge to pickpocket the guard” vs “make a distraction to pickpocket the guard” vs “pickpocket the guard”.

- These action descriptions also inform how success and failure will be resolved. None of this even requires special implementation, it just happens naturally in the prompts.

For social gameplay the player could be very specific about how the character presents themselves. For example if you say “I walk up to the person confidently” that can/should affect the interaction.

- Like action descriptions this will naturally affect the interactions as long as the descriptions aren’t scrubbed from the prompts.

- Players won’t naturally describe things like body language and attitude. How can we solicit that kind of input? How can we prove to the player that it matters?

- Comedy of manners is an interesting genre to imagine (as in A Family Supper).

- This is most salient in environments where there are strong social rules: court intrigue, playing ambassador, a Jane Austen style social gathering, a Babylon 5 style environment. The social rules will be much more interesting and salient if they are articulated as part of the initial world building setup.

To what degree should NPCs function similarly to the player character? Specifically, should the NPC perform feats with action resolution?

- The LLM self-censors more easily than a human, and with appropriate prompting the NPC won’t attempt strange actions.

- Surprising actions would require imbuing these characters with additional willpower (through prompts) to make gamemaster mediation of characters more meaningful.

- NPC feats do offer an element of surprise that could be very appealing. Failures are interesting, and the LLM is unlikely to script failures entirely on its own.

- Because everything is normalized to text it’s fairly easy to apply the same rules to players and NPCs. Things like NPC skill levels can be created on demand.

World and Context

When considering how to represent any aspect of the world (location descriptions, people’s attitudes, location and connections, etc) there are a few implementation options:

- Full code: create data structures around each attribute, allow many aspects to be resolved automatically, create custom prompts to manage and update the structures, and so on. It’s easy to imagine creating this for an inventory system.

- Casual natural language: describe pertinent aspects of the attribute in natural language, using prompts and output templates to guide the LLM towards salience. All resolution is done by the LLM since to the code it’s just a bunch of words. NPC character descriptions fit here. For instance you might care about the social status of the NPC, and want prompts and templates that encourage specifying that social status, but it doesn’t have to be a formal attribute that can be isolated.

- Pile of words: let the attribute be described in general purpose summaries, transcripts, or descriptions. Allow the attribute to be unspecified, or maybe it comes into being when necessary. NPC clothing or attitudes might fit here.

- Hallucinate it all: don’t worry about saving anything, just let the LLM make it up as needed, without even ensuring consistency across time. If the attribute is easy enough to infer from other details then maybe this is fine. This might be sufficient for a room description… at least until the player tries to inspect or interact with the room or its contents.

- Embrace incremental specificity: for many aspects of the world and characters you can imagine going up through this list, beginning with just vibes that allow for LLM imagination/hallucination, then remembering those for later use, then formalizing them when a mechanism asks for a formal value (such as a skill level).

Let’s revisit Retrieval Augmented Generation (RAG): retrieving a portion of the game/world/character knowledge and inserting it into the prompt.

- When you include information in a prompt the LLM isn’t forced to make use of that knowledge, but it is available. In a game that can be things like location descriptions, past events, character personalities or factoids, etc.

- My hand-coded prompts are doing a kind of RAG, in that I fetch and insert knowledge I believe will be relevant to the current task.

- In my hand-written prompts I’m not just inserting information, I’m also contextualizing that information. That can be as simple as “The room is described as {room_description}”.

- Context really matters. Especially in a somewhat adversarial situation like a game: it’s quite important that the LLM not confuse the player with another character. This is a common issue and can happen quite often even with a custom prompt.

- RAG is usually imagined as a more automated system: a bag of facts, roughly tagged and labeled, that get inserted into prompts. How they are selected is probably some combination of heuristics, structured search, and fuzzy (embedding) search.

- Determining what facts are memorable is a big task of its own. This should be informed by the game mechanics, so that facts that are likely to impact the gameplay are remembered and other facts are allowed to fade into history.

- Some facts should probably be saved in a structured form rather than relying on RAG. For instance if the LLM invents rooms and passageways those would probably best be remembered as a formal series of locations, not put into a pool of memories.

- The intent parsing prompt could easily do the additional work to create RAG-related queries that will support the gamemaster and NPC prompts.

- As memorable facts are created I can imagine preprocessing facts to target them for different contexts. For instance if a character named Jane Doe says “I’m a big fan of chili dogs” then you might index on something like “Jane Doe is a big fan of chili dogs”. But when you use the fact you may combine it into a list of “likes” for the character.

- This preprocessing might also be applicable to other aspects of the prompts. For instance I can imagine a dozen ways you can present the world building background given the needs of a prompt. You could have a very long document describing the world and then distill that down to smaller passages that describe architecture, social mores, a magic system, social movements or tensions, and so on. These wouldn’t have to be retrieved by a RAG/search system, but could be inserted more manually into prompts. Then you can leave out architecture guidance from an NPC character response prompt.
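The chili dog example can be sketched as a fact that is stored once but presented differently depending on use. The `Fact` fields and `render_character_facts` are hypothetical names, and the step where an LLM turns a line of dialog into such a fact is assumed to have already happened.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Fact:
    subject: str     # who the fact is about
    kind: str        # hypothetical category, e.g. "likes"
    value: str       # the short form used when combining facts
    index_text: str  # standalone sentence used when searching for the fact


def render_character_facts(facts: List[Fact], subject: str) -> str:
    """Combine a character's facts into compact per-category lines for a prompt."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for fact in facts:
        if fact.subject == subject:
            grouped[fact.kind].append(fact.value)
    return "\n".join(
        f"{subject} {kind}: {', '.join(values)}" for kind, values in grouped.items()
    )


facts = [
    Fact("Jane Doe", "likes", "chili dogs", "Jane Doe is a big fan of chili dogs"),
    Fact("Jane Doe", "likes", "old maps", "Jane Doe enjoys collecting old maps"),
    Fact("John Doe", "likes", "silence", "John Doe prefers silence"),
]
rendered = render_character_facts(facts, "Jane Doe")
print(rendered)
```

Retrieval would search over `index_text`, while the prompt only ever sees the rendered, contextualized lines.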

Using multiple prompts isn’t just helpful for focusing the instructions and purpose of the prompt, but also for limiting the information.

- Games built on Custom GPTs or as a prompt to ChatGPT are terrible in this regard: not only is everything visible to every interaction, but it’s also all visible to the user.

- When there’s complete information it’s also very hard to separate the narrative goals from specific interactions. If it’s a space adventure then they are always adventuring, there’s no normalcy. When every detail is created in service of the narrative it can feel confining, and details begin to telegraph parts of the plot as you learn everything is created in service to the story.

- Generally I think these separate prompts and limited information give the player (and potentially NPCs) more true autonomy and surprise.

LLMs have some biases and tendencies to repeat themselves. GPT notoriously loves the word “delve”. It’s also partial to the name “Silas”.

- The LLM is much better at diversity of responses when it makes lists instead of coming up with a singular answer. The responses are also more interesting, like lots of little brainstorming sessions.

- I think humans are like this too: we think up lists in our head but only say one item out loud. An LLM can’t think of things privately.

- Maybe a solution here is to have the LLM produce and draw from more lists. For instance you might make a list of possible locations: florist, dilapidated theater, abandoned well, and so on. Give the LLM a shuffled sampling of some of these and let it choose the most appropriate next location (and still let it invent a new type of location if the plot calls for it). If you are building a medieval city this will keep everything from being a tavern, or every building in a wealthy neighborhood from being a mansion.

- In the case of creating things from lists (similar to rolling dice to resolve actions), you need an additional prompting step: a prompt to identify the missing information or action, then code that rolls some dice to sample from a list, and another prompt to handle the result of those rolls.

- But you don’t want to run long chains of large prompts that include all the context. Most of the context really isn’t necessary for these chains. But if you don’t have any context then there’s no reason to use the LLM at all. This is where you want thoughtful but restrained context construction.
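Giving the LLM a shuffled sampling from a larger list can be sketched like this. The candidate list beyond the three locations named above, the prompt wording, and the function names are assumptions for illustration.

```python
import random
from typing import List

# The first three come from the example above; the rest are made up.
LOCATION_TYPES = [
    "florist",
    "dilapidated theater",
    "abandoned well",
    "bakery",
    "watchtower",
    "tannery",
]


def sample_options(candidates: List[str], count: int, rng: random.Random) -> List[str]:
    """Pick a random, randomly ordered subset of candidates to offer the LLM."""
    return rng.sample(candidates, min(count, len(candidates)))


def build_location_prompt(scene: str, options: List[str]) -> str:
    """Build a small, focused prompt that offers the sampled options."""
    listing = "\n".join(f"- {option}" for option in options)
    return (
        f"{scene}\n\n"
        "Choose the most appropriate next location from this list, "
        "or invent a new type of location if the plot calls for it:\n"
        f"{listing}"
    )


rng = random.Random()
options = sample_options(LOCATION_TYPES, 3, rng)
prompt = build_location_prompt("The player leaves the market square.", options)
print(prompt)
```

Because the sampling happens in code, the variety comes from the dice rather than from hoping the LLM will avoid its favorite answers.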

The world feels much more alive if there are things happening in the background.

- Running a full “simulate the world in LLM calls” system is expensive and slow and chaotic.

- I can imagine a simpler roll-for-activity, where a setting might have a list of possible background activities that happen and one gets picked randomly. Some might be simple, like someone starts a round of singing. Another might trigger other events, like a fight breaking out.

- In general there’s a pattern of asking the LLM to imagine patterns of behavior or activity instead of imagining activity from scratch at each step. For instance imagining a list of possible locations an NPC might visit.

- The precalculation is also to achieve balance. If you just ask the LLM to invent background activity it’s going to invent the same activity over and over… because why not?

- Generally you have to look at prompts and think: is there enough new information, or novel combinations of information, that would engender a unique and interesting response? If not then either you have to expand the prompt context, or consider other approaches to ensure variety.

- You can also do just-in-time background activity. Like if you speak to a character for a second time, the LLM invents some activity for the character since the last time you spoke.
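A roll-for-activity table like the one described above might look something like this. The table contents, the weights, and the `recent` parameter are assumptions for the sake of the sketch; the table itself is the kind of thing the LLM could precalculate per setting.

```python
import random

# Hypothetical per-setting table: (activity, weight, follow-up event or None).
TAVERN_ACTIVITIES = [
    ("someone starts a round of singing", 5, None),
    ("a traveler shares news from the road", 3, None),
    ("a fight breaks out", 1, "the town guard is called"),
]


def roll_activity(table, rng, recent=()):
    """Pick one weighted activity, skipping anything that happened recently."""
    # Fall back to the full table if everything has happened recently.
    choices = [row for row in table if row[0] not in recent] or list(table)
    weights = [row[1] for row in choices]
    activity, _, follow_up = rng.choices(choices, weights=weights, k=1)[0]
    return activity, follow_up


rng = random.Random()
activity, follow_up = roll_activity(TAVERN_ACTIVITIES, rng)
print("Background:", activity)
if follow_up is not None:
    print("This triggers:", follow_up)
```

Passing the last few activities as `recent` is one cheap way to keep the same thing from happening over and over, without asking the LLM to remember what it already invented.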

-

What is the right amount of world setup? That is, how much should the LLM work out on the fly and in response to player actions, and how much should be created ahead of time (maybe still by the LLM)?

- There’s both setup of concrete things (locations, characters, backstory) and potentially setting up the rules themselves.

- That is: when you run much of the game on natural language it’s possible to invent new rules, new facets, new verbs. Maybe you are in a situation where everyone has an allegiance, or a magical power, or secret name. It’s a bit annoying to code this flexibility, but much easier than ever before.

- Allowing the LLM to invent new rules feels chaotic and hard to control when engineering the system, but is awfully fun when playing with the system. Making it work is probably a case of careful interconnected prompting with a smaller and more constant core ruleset.

-

An LLM brings a wide array of knowledge to everything.

- Sometimes that feels like a bug, like asking a peasant to solve an algebra equation (and getting the answer).

- But the LLM can legitimately roleplay not just the personality of a character, but also their knowledge. In one session my daughter encountered a Professor of Astrology, and quite enjoyed learning actual astrology from her.

- The LLM can also speak other languages, critique etiquette, and all kinds of other peculiar skills. Bringing those skills out in the characters feels productive.

- The LLM also gets things wrong but that feels less concerning in a game.

-

I’ve thought a lot about MUDs and multi-user worlds. But only in vague pie-in-the-sky ways…

- In general it seems really compelling if a player can implicitly or explicitly create the world. But it’s not very interesting unless the player can share the result.

- A lot of the action resolution and other fairly fuzzy techniques are not well attached to a clock. If you have multiple synchronous players then everything has to happen on a central clock.

- There’s also lots of race conditions if you allow players to make “big” moves. What if I set up a bank heist that takes weeks of game time to put together, and another person just does a stick-up job? How do I split that larger event to include that smaller event? How does real time relate to game time?

- Given the right pretense for the world maybe you can avoid some issues. That is, you can invent a world with particular and maybe peculiar rules that make multi-user play easier.

- Non-synchronous play is also possible, but players never get to meet. But just the evidence that other real people have been in this world would make everything feel so much more real.

- Maybe the players only meet in certain places where the rules are different and more compatible with social play. That space might only allow discussion, trading items, etc., but not concurrent interaction with the game world.

- I can imagine something with portals, time warps… with something like a League Of Time Travelers club where people meet.

- Another simpler approach might just be a complete serialization of users’ actions: one user’s action has to be completely resolved before another action by any user will be considered. There are several Discord games that effectively work that way.

- I’ve ignored traditional “safety” concerns about misuse of the LLM or abuse in the world. In a single-player game I feel fine leaving that to the player. But in a multi-player game this would come up quickly.

- There’s also so many ways to prompt hack a game like this. If it’s just you playing in your own game then who cares? If it’s fun then have fun with it, if it’s boring you’ll move on. But with other players this probably won’t do. (Unless it’s a close-knit group.)
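The complete-serialization approach mentioned in the list above is easy to sketch: a single queue, where each action is fully resolved before the next one is even looked at. The class name and the stand-in resolver are hypothetical; a real resolver would be one or more LLM calls.

```python
from collections import deque


class ActionQueue:
    """Resolve player actions strictly one at a time, in arrival order."""

    def __init__(self, resolve):
        self._pending = deque()
        self._resolve = resolve  # callable(player, action, log) -> outcome text
        self.log = []            # shared world history, oldest first

    def submit(self, player, action):
        self._pending.append((player, action))

    def run(self):
        while self._pending:
            player, action = self._pending.popleft()
            # The resolver sees every earlier outcome, so there are no races.
            outcome = self._resolve(player, action, self.log)
            self.log.append((player, action, outcome))
        return self.log


def stand_in_resolver(player, action, log):
    # Placeholder for the LLM call that would actually resolve the action.
    return f"{player} attempts to {action} (after {len(log)} earlier actions)"


queue = ActionQueue(stand_in_resolver)
queue.submit("ana", "plan a bank heist")
queue.submit("ben", "hold up the bank")
for player, action, outcome in queue.run():
    print(outcome)
```

This avoids race conditions at the cost of making everyone wait on the slowest resolution, which is probably why it suits slower-paced chat games.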

Interface and interaction

-

Text input feels pretty weighty, each turn can lead to blank page syndrome.

- In real life you may have experienced the feeling: “I could do anything right now, I don’t have to be anywhere or do anything…” and then you still don’t know what to do with that freedom? That’s how the roleplay often feels, and maybe that’s just too much.

- A common solution is to give multiple choice options. With the LLM an Other option can also be available where the player can enter any text. Is that good enough? You can find these games all over the place but I haven’t fallen in love.

- Every time I consider a simplified interface my mind pushes back because I really want the player to be generative. Multiple choice just doesn’t excite me.

- Maybe I’m wrong. Multiple choice appears to be just a tree of choices, but with all the other inputs to the system there’s no real chance of repeatability. And if you start blending in the player’s (or the player character’s) general attitudes then it feels very bendable.

- Multiple choice isn’t just pre-filled options, it has an effect on the rhythm and pace of the game. The story will be driven by those questions, bouncing from pivotal choice to pivotal choice. Just having an Other option doesn’t let you explore the scenario at different paces or with different focuses.

- Multiple choice isn’t just about restricting answers, it’s about presenting everything as a Choice. That kind of presentation literally doesn’t exist in a chat interface.

-

Learning how to use the game is actually pretty simple!

- I’ve been reading some discussions and commentaries about different MUDs and learnability is a major issue. There’s so many mechanisms. Natural language input really helps!

- Natural language input with LLM resolution is not like a traditional text adventure (e.g., Zork). In a text adventure there’s an underlying finite mechanism, and the parser translates your text into something like underlying commands. With LLM resolution, the LLM is not translating your action to an intermediate representation! It is quite hard to enter a command that doesn’t do something.

- In fact, always doing something is itself an issue. One issue LLMs have in general is that when they are asked to perform a task they will perform it, even if the task is underspecified, nonsensical, improbable. There’s ways to mitigate that, but it’s always lurking.

- There is an open question about how to speak to the gamemaster, out of character. An example might be to ask “how hard would it be to …”. Or just to understand the rules or expectations.

-

What rhythm should the game have?

- The most obvious way to do a chat-based game is one long stream of activity.

- In terms of implementation it’s very helpful to have summative moments, when the past is compressed into something smaller and certain effects can be applied. For instance updating “reputation” on a turn-by-turn basis is undesirable: it shouldn’t change that quickly, and should be based on a set of actions rather than any single action.

- Changing location or ending a conversation are obvious moments to do this, but are there others?

- Gameplay also seems like it benefits from moments of reflection and a pause from action. There has to be a moment when you can tell the world has actually changed due to your actions.
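One way to picture a summative moment: buffer what happens during a scene, then apply a single bounded reputation change when the scene closes. This is only a sketch under assumptions. The `Scene` class, the impression scores in [-1, 1], and the `max_shift` cap are invented here; in a real system an LLM would judge the impressions and write the summary.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    # Each event is (description, impression), with impression in [-1, 1].
    events: list = field(default_factory=list)

    def record(self, description, impression):
        self.events.append((description, impression))


def close_scene(scene, reputation, max_shift=2.0):
    """Apply one bounded reputation change for the whole scene, then compress it."""
    if not scene.events:
        return reputation, ""
    # Averaging means no single action can swing reputation by itself.
    average = sum(score for _, score in scene.events) / len(scene.events)
    new_reputation = reputation + max_shift * average
    summary = "; ".join(description for description, _ in scene.events)
    scene.events.clear()  # the detailed past is replaced by the summary
    return new_reputation, summary


scene = Scene()
scene.record("helped the innkeeper carry barrels", 1.0)
scene.record("haggled over the room price", 0.0)
reputation, summary = close_scene(scene, reputation=10.0)
print(reputation, "|", summary)
```

Changing location or ending a conversation would be the natural places to call `close_scene`.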

-

Instead of a singular game experience I’ve also thought about little mini-games. These might share many UI elements, but be driven by different prompts and have different goals and outcomes. Some possible mini-games:

- Debate: the player debates some issue pertinent to the setting. Setup emphasizes clear conflict and opposing ideas, conclusion is about how convincing the argument was.

- Deceit: conning someone (an NPC). A little like prompt hacking as a game, but “prompt hacking in character”.

- Wandering/exploring: leads to an emphasis on setting. Could be generative itself: wandering as a way to build the world. The player’s gaze literally makes things come into being. This would be much simpler if the player themselves didn’t perform many feats or impactful actions during this wandering.

- Scavenger hunt: like wandering but with a little more purpose. Some kinds of quests are scavenger hunts with the pretense of a plot. The LLM’s ability to judge could make things interesting. For instance: “find a sad person.”

- Day in the life: successfully navigate a normal day for the character. Do not make a fuss, do not attempt crazy things, be the person people expect you to be. It feels a little tedious but depends on the novelty of the character and their life. What’s it like to be a person of a different gender, class, or live in a very different society? Not caricature, just… normal. The patience and credulity of the LLM makes this feasible.

- The hard conversation: a breakup, an intervention, a confrontation, a terrible job interview. Might work best in real-world (including historical) scenarios, though perhaps a veneer of fantasy would soften the scene. Can start with a clear conflict, maybe with a desired outcome.

- Attempt a feat: such as a heist, a jailbreak, etc. Create a plan. Gather your crew. Maybe the actual act doesn’t have to be roleplayed, and instead it’s all setup and then let the gamemaster tell the story of the outcome. The resulting story could be presented as a kind of minute-by-minute timeline.

- Relationship building: à la Stardew Valley, or like the conclusion to Groundhog Day where you pursue a perfectly benevolent day. Spend your time checking in with folks. Make small talk. Setup is arranged to add just a little new flavor each day, some interconnected events and relationships between NPCs, but the action is low and the friendliness is high.

- Unlike a game like Stardew Valley, it’s not just you trying to endear yourself to the NPCs, the NPCs can get to know your character as well.

- I worry the idea of mini-games is a kind of avoidance: unable to create one compelling experience I instead imagine several, none of which exist…

- A positive point: if you have a diverse set of interactions (à la mini-games) it’s very easy to normalize them all to the core game mechanics. All you have to do is present the finished game/interaction/scene textually and have the LLM translate that into effects.

-

Maybe there should be a Jiminy Cricket sort of character, the Honest Advisor/Spirit Advisor. A secret companion that helps out. A voice in the player character’s head. A magical entity.

- Is this just another way to present a help system? To keep the player from getting lost or misunderstanding the goals?

- If it’s more than that, then what is it? Maybe it’s a source of goals.

- One pretense might be that you, the player, have teleported into the consciousness of another person. Quantum Leap-style.

- Or instead of completely replacing that character it’s more consensual or complementary, making that secondary consciousness the companion.

Player goals and motivation

-

Games are more fun and make more sense when there is a goal. Even a vague goal like “level up” or “get lots of money”.

- Everyone wants to know if they are winning! Though I’m not sure that’s true.

- It creates a purpose, a reason to do one thing instead of another thing. Especially if you are pretending to be a person (with an incomplete psyche and history) it helps to have suggestions about what you want.

- Should goals be part of character creation? A character-specific goal rather than a singular goal like “level up”.

- The life simulator creates scenarios which have some direction and purpose, but often two goals. This is because I’ve focused on choices, meaning scenarios where there’s two plausible directions. Maybe that’s bad? Or at least I shouldn’t combine the choice and the scenario roleplay. The result feels mushy.

- If there’s no purpose then people make tension. I think a lot of bad behavior is a kind of acting out. If you don’t have anything to do, any purpose or relationship to the medium, then it’s fun to just stir the pot with inflammatory statements or actions.

-

What kind of game is this really?

- “Roleplaying game” is a really broad category, too broad.

- Journaling games are a genre worth thinking about more deeply. These games are more journey than goal, with a lot of generative aspects, and result in an artifact (the “journal”). They are often one-player tabletop roleplaying games, with lots of rolling to look up effects in tables.

- I think much of the magic of journaling games is the combinatorial results. LLMs are also good at mixing and combining. Like you pick randomly from an event table and get “tragedy”, “politics”, “baking”, all set in London circa 1200AD: “A beloved baker, known for his innovative breads that fed both the rich and poor of London, tragically dies in a suspicious fire during political riots sparked by a royal decree imposing heavy taxes on grain.”

- I don’t really “get” life simulation games. But I haven’t really tried. (What should I try?) The Sims doesn’t seem quite right, but there’s a ton of mobile games where you “make life choices”.

-

Is building relationships fun? Maybe… it’s an important part of Stardew Valley, for instance. How are they fun?

- Minmaxing relationships makes them feel hollow and like XP grinding. Do you win just by being cloyingly nice to everyone?

- I see potential in giving characters secret backstories. Maybe even an overarching secret, something that you can discover by puzzling together the bits and pieces you get from several characters. This is a good gamemaster task: create the underlying secret, partition it into distinct and incomplete observations or pieces of knowledge, then distribute that to characters.

- It still has to have a conclusion, the secret alone isn’t enough. The secret must do something. Maybe it’s an accusation against a murderer or access to some secret organization. So in addition to establishing the secrets the gamemaster must create the criteria to decide enough of the secret is revealed to unlock something.

- A common relationship building task in regular games is to do quests on behalf of the NPC. While that might be OK sometimes, I think it’s used so heavily because there’s no real relationships to be built with a traditional NPC. But the idea is magnetic, I keep coming back to it even though I don’t find it interesting. Or rather quests are fine, but they aren’t relationship builders.

- Another false path: no riddles. The LLM will love to propose riddles. It knows like 3 riddles. Do not let it make riddles.

- While it is somewhat ethically fraught, I am interested in scenarios where the player “fixes” characters. Helping a despondent character, a self-hating character, a fearful character. And at least it’s less fraught than trying to get the AI to fix the user.

- But you can’t really use this idea of amateur player psychologist without a theory about how the character can be helped. You can’t just set up the psychological issue, you have to offer the LLM a theory of change and have it check in on both the theory and the interactions to see if there’s progress.

- To keep going with this inversion of roles: instead of the AI teaching the user, can the player teach an NPC character? Again this requires very specific prompting, both to create the initial veil of ignorance in the character and to judge how the character learns. But it’s definitely doable. And maybe the result is more educational than having the LLM teach.
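The overarching-secret idea from earlier in this list has a mechanical half that plain code can handle: dealing the fragments out to characters and checking whether enough have been discovered. A minimal sketch, assuming the secret has already been split into fragments (by an LLM, say); the fragment text, character names, and the simple count-based unlock rule are all hypothetical.

```python
import random


def distribute_fragments(fragments, characters, rng):
    """Deal fragments out round-robin so each character holds only part of the secret."""
    shuffled = fragments[:]
    rng.shuffle(shuffled)
    holdings = {name: [] for name in characters}
    for i, fragment in enumerate(shuffled):
        holdings[characters[i % len(characters)]].append(fragment)
    return holdings


def is_unlocked(discovered, fragments, required):
    """The secret 'does something' once enough distinct fragments are known."""
    return len(set(discovered) & set(fragments)) >= required


fragments = [
    "the fire started in the grain store",
    "the baker owed money to the guild",
    "a guild seal was found in the ashes",
    "the tax collector left town that night",
]
characters = ["miller", "priest", "watchman"]
holdings = distribute_fragments(fragments, characters, random.Random(11))
for name, known in holdings.items():
    print(name, "knows:", known)
print(is_unlocked(fragments[:3], fragments, required=3))
```

A count threshold is the crudest possible criterion; the unlock check could just as well be another prompt that judges whether the player has pieced together enough.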

-

What can we learn from traditional NPC interactions?

- Normal NPC interactions turn into: does the person like or not like you? Will they tell you a secret? Will they tell you something you can later tell someone else to unlock something (a kind of password). It always comes back to the “core” gameplay. So what’s the core gameplay for this roleplay game?

- There is none! No wonder I’m having a hard time…

- Life simulation games are maybe more about making pivotal choices, but also opening up choices by dialog. For instance, endearing yourself to someone so you can choose a relationship. Often romance…

-

Games with romantic elements often turn into a scoring game. How many likeability points can you get? This is not a good model of romance.

- Romantic goals powered by an LLM could turn into something like a pickup artist game: can you prompt hack (socially manipulate) someone into liking you?

- Scoring encourages you to just pester someone into liking you, throwing lovebomb shit at the wall. In real life that kind of works… but not that well, and the LLM is probably much more easily hackable in this respect than a real person.

- When pursuing a real romance you can’t just run up the score. If you want to start a relationship with someone it’s helpful if they like you, but it also requires something qualitatively different than just being continually likable.

- Being relentlessly nice doesn’t create this qualitative shift in a relationship. And just pushing forward will raise a person’s defense mechanisms.

- The LLM can actually do some of this! It can become skeptical and defensive. It can push back against bland niceness.

- Is a relationship shift a kind of feat? Is the feat only accessible when you’ve done some of the score-building legwork?

- Romance feels kind of weird and salacious to include, but it’s one of the big pivotal acts we engage in during our lives, something where we can’t predict the outcome or even understand the pursuit. Romance receives much more attention outside of games as the most common book genre, movie genre, and a constant plot driver in TV.

- Fame could be an alternative to romance with some of the same issues. It feels accessible to scoring but you can’t just score lots of small fame points, lots of little interactions don’t add up to something big… you need dramatic actions. Maybe it just means each “fame point” is really hard to achieve. Maybe that should be a pattern for more activities.

-

The metagame is part of the fun, knowing that you are playing a game that is designed to be played. The idea you can definitely win and that it’s balanced to be challenging before you win.

- Games on rails are generally more balanced. Without rails you might be doing something pointless, exploring something that doesn’t lead anywhere, or pursuing something that is still too hard for you. There’s a comfort to the rail.

- The natural LLM exercise of removing all rails may be too much. But maybe you can build rails Just In Time. The player can jump the rails, but new rails appear.

The medium of gameplay

Everything I’ve talked about is extremely text-heavy, but there’s more we could add to that…

-

Voice output:

- Voice generation is expensive, more expensive than a lot of the LLM calls. It’s oddly expensive.

- Voice generation also adds more latency when it’s chained with other LLM calls. I’ve chosen not to worry much about latency but it feels worse with voice output. Maybe because you wait wait wait, then you have to listen because it comes up all at once. With text you wait, but the text also waits for you.

- But having a variety of voices is pretty fun.

-

Voice input:

- Cheaper than voice output. Also feels odd.

- There’s lots of technical bits to get it right. Handling voice input, turn taking, activation, endpointing, etc. It’s really hard. I’ve done it many times and it’s still really hard. But simple push-to-talk is not very hard. Push to talk works better now that new voice recognition systems like Whisper are high enough quality that record-and-send is feasible without any verification/editing.

- Does voice input change how the interaction feels? It can invite longer, less carefully composed inputs. (The LLM can handle long inputs well!) Does the lack of editing help or hurt?

- I’ve been playing Suck Up which is a game that uses voice as its primary input (and an LLM).

- That game gives a limited time for input. That’s not exactly necessary, but maybe it’s good to both avoid something like a stuck button, and to generally encourage turn-taking without long soliloquies.

- When I first tried Suck Up I was someplace I couldn’t freely speak and tried the alternate keyboard input. It wasn’t very fun! It felt burdensome.

-

Image generation:

- Custom GPT games frequently use Dall-E image generation. I generally haven’t enjoyed those games (they tease but never deliver), but I also haven’t been impressed with the image generation.

- There’s something about the fully hallucinated but also throw-away images that feel trivial and untrustworthy.

- Also I just don’t like Dall-E. Its indirect prompting makes it hard to control. This doesn’t lead to chaos, but instead to laziness: because prompting effort isn’t rewarded, people let it produce its default style which has become tedious and noisy. Midjourney’s default style isn’t particularly better, but people don’t stick to Midjourney’s default style.

- Just generally images are slow and expensive to create. One-off images are hard to justify. Persistent images in a persistent world are more reasonable.

- I can imagine a shared-world game where some players contribute a lot of images that they make semi-manually (e.g. with Midjourney). The system could show entities missing pictures and offer a prompt, and a player could go off and do their thing and come back with an image. I’m pretty sure for some players this would be a relaxing and rewarding activity.

-

How can we make the setting and characters feel more embodied? There’s a sameness in the text that can become tedious.

- In my historical experiment I made ~100 tiled backgrounds representing different settings (a hut, a yurt, a dungeon, an apartment, etc). I give the LLM the list and ask it to pull out the most fitting background. This doesn’t require live generation, but still makes the setting feel more custom.

- It’s interesting to think about background noise being selected in the same way. A site like ambient-mixer.com shows how you can use simple mixing to create a wide variety of soundscapes.
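Picking from a fixed list has one practical wrinkle: the LLM's reply may not match a list entry exactly. Here is a small sketch of mapping a reply back onto the known backgrounds. The background names, the fallback default, and the fuzzy-match cutoff are assumptions, not the code from the historical experiment.

```python
import difflib

# Hypothetical subset of the background names given to the LLM.
BACKGROUNDS = ["hut", "yurt", "dungeon", "apartment"]


def resolve_background(llm_reply, backgrounds, default="hut"):
    """Map a free-text LLM reply onto one of the known background names."""
    cleaned = llm_reply.strip().strip(".\"'").lower()
    if cleaned in backgrounds:
        return cleaned
    # Tolerate small deviations such as plurals or typos.
    close = difflib.get_close_matches(cleaned, backgrounds, n=1, cutoff=0.6)
    return close[0] if close else default


print(resolve_background(' "Yurt." ', BACKGROUNDS))
print(resolve_background("dungeons", BACKGROUNDS))
print(resolve_background("spaceship", BACKGROUNDS))
```

The same lookup would work for selecting background noise, since it only depends on having a fixed list of names.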

-

Another option for a more embodied world generation is to have the LLM create structure, but instantiate that via a more traditional generative algorithm.

- For instance an LLM could define a room size, floor type, furniture, etc., but an algorithm could turn that into an actual game map.

- Looking through other procedural generation work would give other ideas for how LLMs could give parameters that algorithms actually fill out.
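As a toy version of this split, suppose the LLM returns a small JSON room description and ordinary code turns it into a tile map. The JSON fields, the glyphs, and the random furniture placement are all assumptions made up for this sketch.

```python
import json
import random

# Hypothetical floor glyphs; unknown floor types fall back to ".".
FLOOR_GLYPHS = {"stone": ".", "grass": ","}


def build_room(spec, rng):
    """Turn a room spec into rows of text tiles: walls, floor, and furniture."""
    width, height = spec["width"], spec["height"]
    floor = FLOOR_GLYPHS.get(spec.get("floor"), ".")
    grid = [
        ["#" if x in (0, width - 1) or y in (0, height - 1) else floor
         for x in range(width)]
        for y in range(height)
    ]
    # Place each piece of furniture on a distinct random interior tile.
    free = [(x, y) for y in range(1, height - 1) for x in range(1, width - 1)]
    rng.shuffle(free)
    for item, (x, y) in zip(spec["furniture"], free):
        grid[y][x] = item[0].upper()
    return ["".join(row) for row in grid]


# Stand-in for the JSON an LLM might return when asked to describe a room.
llm_output = (
    '{"width": 8, "height": 5, "floor": "stone", '
    '"furniture": ["table", "bed", "chest"]}'
)
room = build_room(json.loads(llm_output), random.Random(3))
for row in room:
    print(row)
```

The LLM decides what is in the room; the algorithm decides where it goes, which keeps the layout valid no matter what the LLM writes.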

Conclusion

Thanks for coming along. I’m not sure what I’m going to do next with this stuff, neither the details nor the broad directions.

What examples are there of games exploring these directions? Where are people discussing these ideas seriously?

Comments encouraged on Mastodon, Threads, or reluctantly Twitter.